Geological Carbon Storage

Introduction

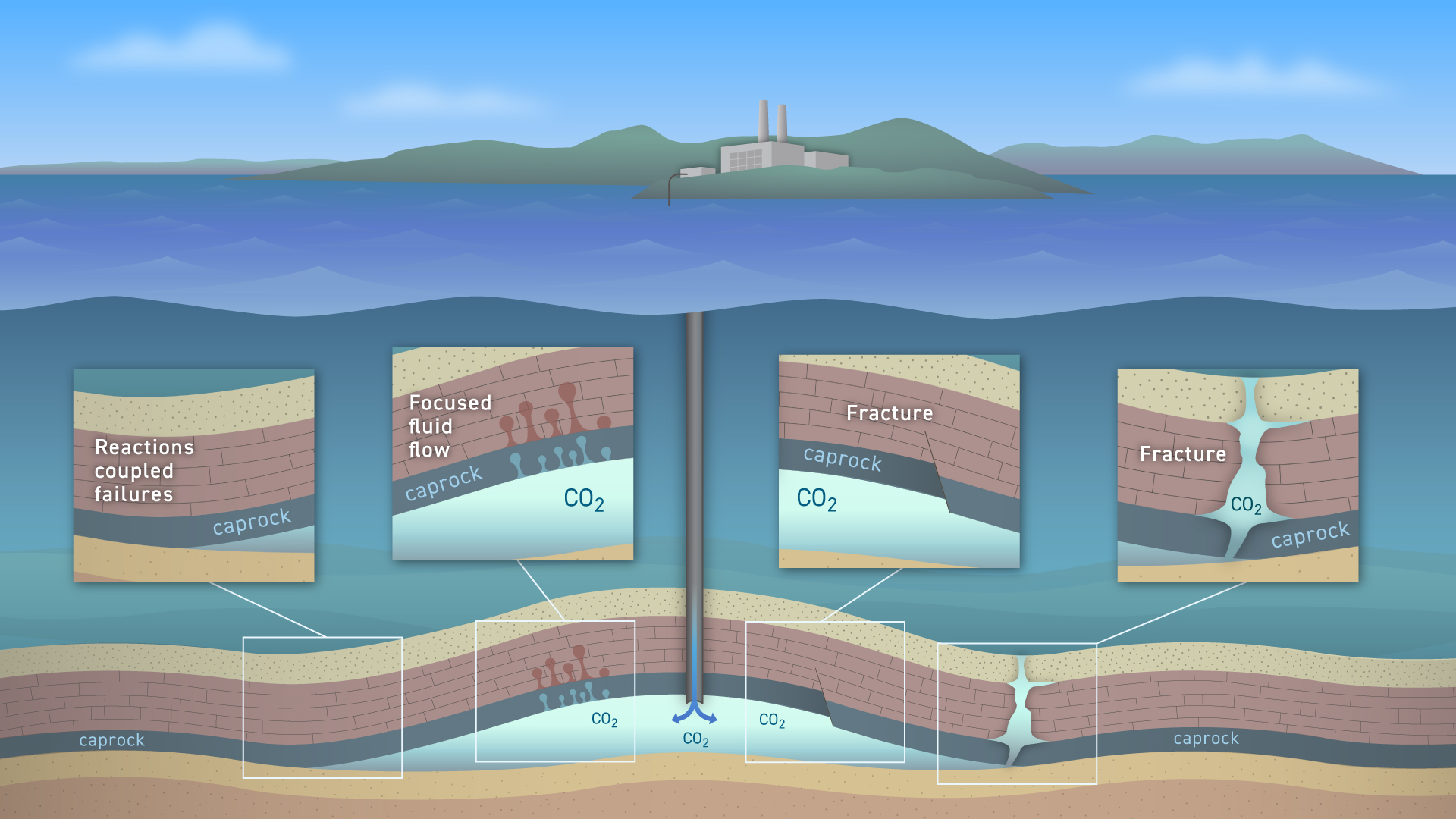

Geological Carbon Storage (GCS) is an essential technology to help mitigate risks of climate change. To meet the goals of the Paris Agreement (Rockström et al. 2017), it is estimated that 550 million tons (Mt) of CO2 must be sequestered per year by 2030 and 5,500 Mt CO2 by 2050, which corresponds to a need of approximately 12,000 CO2 injection sites globally by 2050 P. Ringrose (2020). While GCS is a promising technology to inject large amounts of supercritical CO2 into rock formations for long-term storage, there may be certain risks associated with this technology that call for reassurance of the public, regulators, and other stakeholders of its safety. Even for well-designed CO2 injection projects with accurately established baselines, there remains a risk that the CO2 plume leaves the storage complex via e.g. focused fluid flows, porosity collapse, or changes in fracture networks. An illustration of this is shown in Figure 1 below.

At the Seismic Laboratory for Imaging and Modeling (SLIM), and as part of the industry partners program ML4Seismic, we are addressing these risks by embracing recent developments in simulation-based Bayesian inference (Cranmer, Brehmer, and Louppe 2020)—i.e., the task of deriving statistical information from a system based on in silico simulations—that allows us to develop low-cost, scalable, high-fidelity and uncertainty-aware seismic monitoring solutions for GCS. Our envisioned approach consists of the following components:

Simulation-based monitoring design—a capability to generate realistic time-lapse data in response to CO2 injection in large strongly heterogeneous reservoirs, e.g. saline aquifers;

Seismic monitoring with the joint recovery model—an inversion framework capable of producing high-fidelity time-lapse images of CO2 plumes from sparse non-replicated time-lapse data collected in response to CO2 injections;

Deep neural network classifiers for CO2 leakage detection—a Class Activation Mapping (CAM) framework to identify areas of possible CO2 leakage from imaged time-lapse seismic data;

Surrogate end-to-end inversion—a coupled inversion framework that produces estimates for the permeability from time-lapse seismic data using Fourier neural operators as surrogates for two-phase flow;

Scalable parallel domain-decomposed Fourier neural operators—high-performance computing implementation of 3D Fourier neural operators;

Uncertainty-aware Digital Twin for Geological Carbon Storage—a data-assimilation framework based on simulation-based Bayesian and sequential Bayesian inference capable of rapidly producing high-fidelity CO2 plume forecasts that are consistent with observed time-lapse seismic data;

Simulation-based monitoring design

While various monitoring modalities exist to track the behavior of CO2 plumes to ensure safe operations and compliance with regulatory requirements, active 3D time-lapse seismic monitoring has proven superior but costly. At SLIM, we aim to reduce the operating costs by optimizing acquisition design and to answer questions such as

- How often and when (early and/or late in a GCS projects) do seismic surveys need to be performed to mitigate long-term risks?

- How can the sensitivity of seismic monitoring systems be improved while keeping costs down?

- To what degree do time-lapse surveys have to be replicated to achieve high degrees of repeatability?

To answer these questions and to help drive innovations in seismic monitoring acquisition design and imaging, we are developing an open-source software platform Seis4CCS.jl that allows users to perform simulation-based monitoring design and test novel time-lapse acquisition and imaging technologies in silico at scale. An example of our simulation-based framework is included in Animation 1. This example is based on the Compass model (Jones et al. 2012). This model, which is derived from real imaged 3D seismic and well-log data, is representative of the geology of saline aquifers in the southwest corner of the North Sea. To create realistic CO2 plumes, we convert this synthetic seismic proxy model of the compressional wavespeed and density to a proxy model of the porosity and permeability. For this conversion, we make use of empirical relationships derived in (Klimentos 1991).

As outlined in the Strategic UK CCS Storage Appraisal Project report, the stratigraphic sections in this model can be roughly divided into three main sections, namely:

- The reservoir, consisting of highly porous (average 22%) and permeable ( > 170 mD) Bunter Sandstone of about 300 − 500 m thick (red area in the second plot of the top row of Animation 1);

- The primary seal, (permeability 10−4 − 10−2 mD) made of the Rot Halite Member, which is 50 m thick (black layer in the second plot of the top row of Animation 1);

- The secondary seal, made of the Haisborough group, which is > 300 m thick and consists of low-permeability (15 − 18 mD) mudstone (blue area in the second plot of the top row of Animation 1).

Given these proxy models of fluid-flow properties, we simulate the injection of supercritical CO2 using the open-source software FwiFlow.jl (D. Li et al. 2020). This code simulates the growth of CO2 plumes by solving the subsurface two-phase flow equations. To mimic CO2 leakage due to a pressure induced opening of a fault, we increase the permeability at a given location when the induced pressure reaches a critical threshold. Animation 1 shows a movie of the plume development in a leakage scenario.

After fluid-flow simulation, the time-varying CO2 concentration is converted to changes in the compressional wavespeed and density via the patchy saturation model (Avseth, Mukerji, and Mavko 2010). These time-lapse changes in seismic properties are localized at the plume location and are then used to generate synthetic time-lapse seismic datasets with and without leakage. These datasets then serve as input to the joint recovery model (JRM) and neural network based leakage classifiers. Additionally, these simulated seismic datasets will become part of a series of benchmarks designed to test and compare the performance of different approaches to seismic monitoring.

Seismic monitoring with the joint recovery model:

Time-lapse seismic monitoring is widely used to monitor the CO2 dynamics in GCS projects (Lumley 2001; Chadwick et al. 2010). Despite its wide use, seismic monitoring is challenging because time-lapse differences are often relatively small in extent and amplitude, which implies they call for high-fidelity (and therefore costly) imaging of the baseline and monitor surveys. To avoid misidentification of CO2 plumes due to artifacts, the recovered images also need to be highly repeatable in order for subtle CO2-induced time-lapse changes to be detected, e.g. by computing differences between the baseline and monitor surveys or between the monitor surveys themselves.

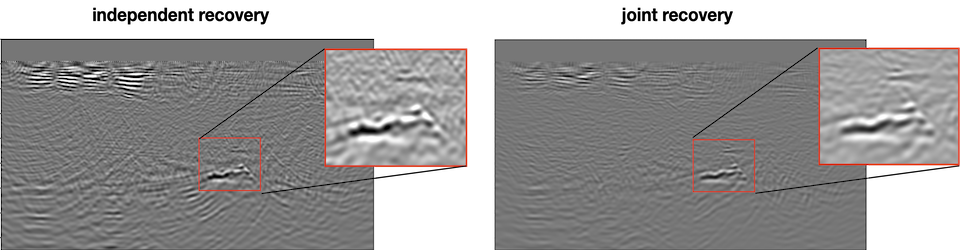

To improve the repeatability of the recovered images without insisting on costly and challenging replication of the monitoring surveys, use is made of the joint recovery model (JRM) (Oghenekohwo et al. 2017; Wason, Oghenekohwo, and Herrmann 2017). Contrary to conventional approaches, where time-lapse seismic datasets are imaged separately, the JRM inverts for the common component, which captures information shared amongst different surveys, and for time-lapse innovations with respect to this common component. This novel inversion approach has been deployed successfully to several time-lapse recovery problems, including but not limited to wavefield reconstruction from sparse simultaneous source acquisition (see Acquisition tab) (Oghenekohwo et al. 2017; Wason, Oghenekohwo, and Herrmann 2017; Oghenekohwo and Herrmann 2017), imaging, full-waveform inversion (Oghenekohwo 2017; Z. Yin, Louboutin, and Herrmann 2021), and field data denoising (Wei et al. 2018).

Mathematically, the JRM for nv different monitoring surveys involves inverting the following matrix:

$$ \mathbf{A} = \begin{bmatrix} \frac{1}{\gamma} \nabla\mathcal{F}_1(\mathbf{\overline{m}}_1) & \nabla\mathcal{F}_1(\mathbf{\overline{m}}_1) & & \\ \vdots & & \ddots & \\ \frac{1}{\gamma} \nabla\mathcal{F}_{n_v}(\mathbf{\overline{m}}_{n_v}) & & & \nabla\mathcal{F}_{n_v}(\mathbf{\overline{m}}_{n_v}) \end{bmatrix}, \qquad(1)$$

where each ∇ℱk, k = 1⋯nv denotes the linearized Born modeling operator for a given vintage, parameterized by the corresponding background model, $\mathbf{\overline{m}}_k$. This matrix relates the common component (z0) and innovation components (zk), collected in vector z = [z0, z1, ⋯, znv]⊤, to the linearized time-lapse datasets collected in vector δd = [δd1, ⋯, δdnv]⊤. This linear system of equations can be inverted via the linearized Bregman method (W. Yin et al. 2008; Witte et al. 2019; Yang et al. 2020). After inverting this system, the seismic monitoring images for each vintage xk are computed by summing the common and innovation components—i.e., $\frac{1}{\gamma} \mathbf{z}_0 + \mathbf{z}_k, k=1\cdots n_v$.

The seismic images recovered by JRM are more repeatable compared to the images recovered by independent imaging, yielding lower NRMS values (Kragh and Christie 2002). Check this SEG 2021 abstract and the GitHub Repository for more information.

Deep neural network classifiers for CO2 leakage detection

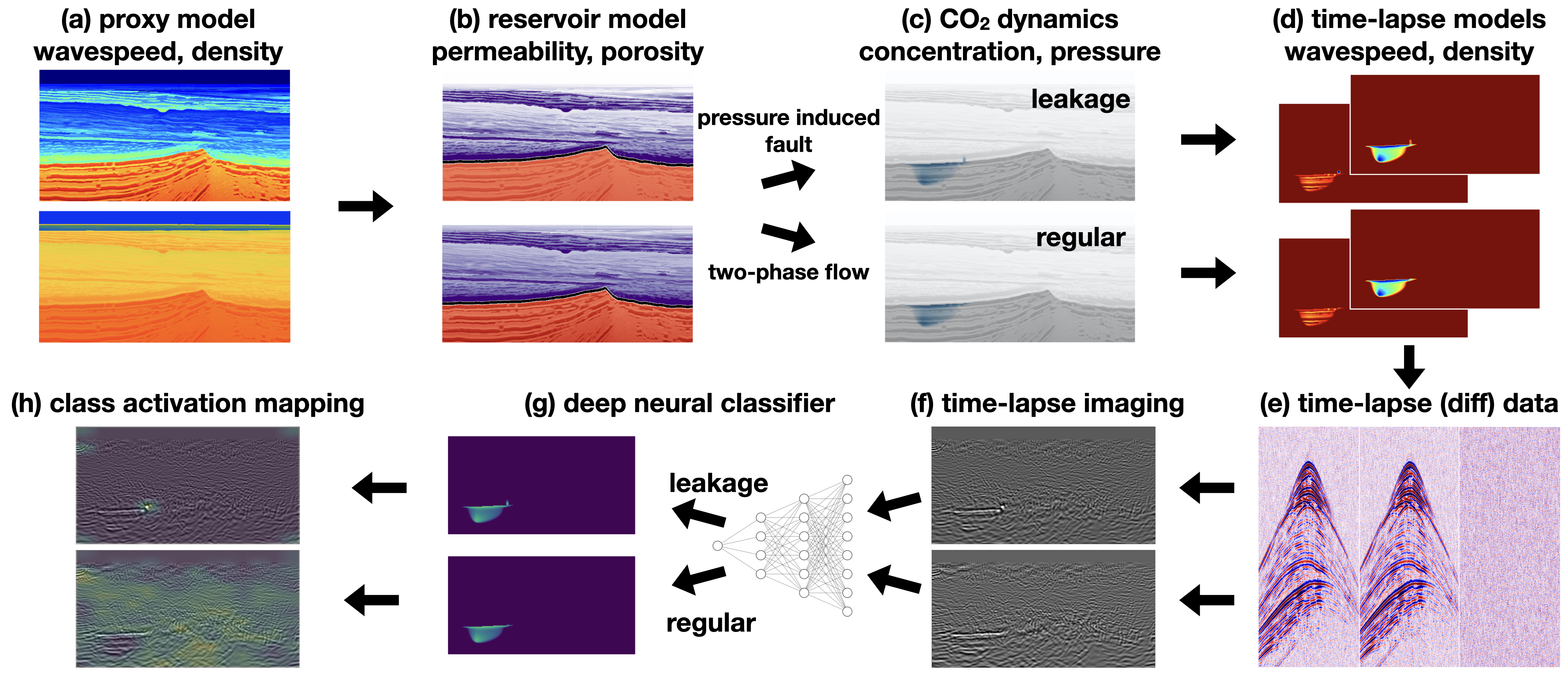

SLIM’s ultimate goal is to detect potential CO2 leakage out of the storage complex from time-lapse seismic monitoring data. To this end, we propose a deep-learning binary classification methodology to automatically detect potential CO2 leakage due to pressure-induced activation of pre-existing faults from time-lapse seismic data. Aside from determining whether the CO2 plume develops regularly or not (i.e. sealed or leakage), we also employ Class Activation Mapping (CAM) methods (Zhou et al. 2016) to generate saliency maps to visualize areas in the time-lapse seismic image that are crucial to the model classification result. Compared to our earlier work on Simulation-based monitoring design and Seismic monitoring with the joint recovery model, this classification process required an additional step as illustrated in Figure #fig-cam-framework below).

For comparison, we use 4 different types of classification network models, including ResNet34 (He et al. 2016), VGGNet16 (Simonyan and Zisserman 2014), Vision Transformer (ViT) (Vaswani et al. 2017) and Shifted Window Transformer (Swin) (Liu et al. 2021). Predicted accuracy on unseen seismic images obtained from time-lapse seismic datasets are included in table below while Figure Figure 4 contains the CAM-based saliency maps.

| Model | Accuracy | Precision | Recall | F1 | ROC-AUC |

|---|---|---|---|---|---|

| VGG16 | 0.920 ± 0.089 | 0.941 ± 0.133 | 0.921 ± 0.081 | 0.927 ± 0.075 | 0.920 ± 0.076 |

| ResNet34 | 0.948 ± 0.020 | 0.982 ± 0.028 | 0.928 ± 0.044 | 0.948 ± 0.040 | 0.967 ± 0.019 |

| ViT | 0.857 ± 0.018 | 0.910 ± 0.102 | 0.820 ± 0.098 | 0.859 ± 0.036 | 0.923 ± 0.023 |

| Swin | 0.836 ± 0.036 | 0.881 ± 0.108 | 0.818 ± 0.078 | 0.841 ± 0.076 | 0.909 ± 0.007 |

From these CAM maps, we observe that the ViT structure with Score-CAM has the narrowest highlighted region that shows the CO2 leakage. These classification results and CAM saliency maps can be used by practitioners to alert the early stages of potentially anomalous CO2 plume development during GCS projects. More information can be found in this 2022 manuscript.

Surrogate end-to-end inversion

While time-lapse monitoring using the joint recovery model yields seismic images with a high degree of repeatability without insisting on replication of the time-lapse surveys, it does not provide direct access to the fluid-flow properties, i.e., the permeability and porosity distribution within the reservoir. Improving our understanding of the spatial distribution of permeability forms an essential component of measurement, monitoring and verification (MMV) of GCS projects that call for accurate modeling of CO2 plume dynamics. To gain access to the spatial permeability distribution from observed time-lapse seismic data, we follow recent work by D. Li et al. (2020) where the seismic forward model is coupled to the physics of two-phase flow. This coupling can then be used to invert for the intrinsic permeability of the reservoir.

In an effort to improve the inversion results and prepare for integration with uncertainty-aware problem formulations, recent developments in surrogate modeling via deep neural networks are employed in a two-pronged approach wherein both the physics of two-phase flow and the statistical distribution of permeability are captured by a neural network. Following Z. Li et al. (2020), we train a Fourier neural operator (FNO) to learn time-varying solutions of the two-phase flow equations for corresponding spatially varying samples of the permeability drawn from a specific distribution. This training process learns the mapping K ↦ 𝒮θ(K) where K represents the spatially varying permeability and 𝒮θ(⋅) the trained FNO with network weights θ. After converting the CO2 concentration to changes in the compressional wavespeeds and seismic forward modeling (via the operators ℛ and ℱ), this mapping can be used to invert for the permeability from time-lapse seismic data by minimizing

$$ \underset{\mathbf{z}}{\operatorname{minimize}} \quad \frac{1}{2}\|\mathcal{F}\circ\mathcal{R}\circ\mathcal{S}_{\boldsymbol{\theta}}\left(\mathbf{K}\right)-\mathbf{d}\|_2^2 \quad \text{subject to}\quad \mathbf{K}=\mathcal{G}_{\mathbf{w}}(\mathbf{z}). \qquad(2)$$

In this expression, 𝒢w represents a normalizing flow trained to generate spatially varying samples from the permeability distribution given the latent variable z.

Because 𝒢w is trained to generate samples from the permeability distribution, adding it as a constraint acts as a regularizer on the ill-posed inversion problem for the spatial permeability distribution. Figure #fig-end2end-result contains an example of end-to-end inversion by solving the optimization problem defined in #couple. Because of buoyancy effects, the injected CO2 plume (see also Figure #fig-end2end-framework (a)) does not occupy the deeper part of the model, causing errors in the estimated permeability for deeper parts of the model (see the top row of Figure #fig-end2end-result). Despite these errors, the predicted CO2 plumes are quite accurate (see Figure #fig-end2end-framework (b)). Furthermore, the inverted permeability can be used to forecast the growth of CO2 plumes in the future at a near-zero additional cost via forward evaluation of the FNO. We observe that the forecast is relatively accurate and catches the global behavior of the plume even though no seismic monitoring data is available (see Figure #fig-end2end-framework (c)). More information can be found in the SEG 2022 abstract and FNO4CO2.jl.

Scalable parallel domain-decomposed Fourier neural operators

The efficacy of the aforementioned end-to-end inversion framework on large-scale CO2 monitoring hinges upon the capability to scale FNOs. To fight the curse of dimensionality, we propose a model-parallel version of FNOs based on domain-decomposition of both the input data and the network weights (Grady II et al. 2022). A sketch of the distributed FNO block structure is shown in Figure #fig-dfno. In collaboration with Microsoft Research and Extreme Scale Solutions, we demonstrate that our model-parallel FNO is able to predict time-varying PDE solutions of over 3.2 billion variables on Summit using up to 768 GPUs. An example of training a distributed FNO on the Azure cloud for simulating multiphase CO2 dynamics in the Earth’s subsurface is shown below. This technology enables practitioners to deploy surrogate end-to-end inversion to monitor realistic 3D reservoirs at high resolutions. More information can be found under Machine Learning, at this GitHub Repository, and in this 2022 manuscript.

The animation below shows the time evolution of 3D CO2 concentration (generated by a pre-trained FNO) on a model derived from Sleipner.

Uncertainty-aware Digital Twin for Geological Carbon Storage

In collaboration with professor Edmond Chow and with support from the National Sciences Foundation, we have started work on the development of an uncertainty-aware “Digital Twin” for seismic monitoring of GCS. This Digital Twin extends our work on Bayesian Inference with (conditional) Normalizing Flows (NFs) described under Machine Learning. NFs are deep generative networks capable of approximating (conditional) probability distributions from samples generated from an invertible mapping from a latent distribution to a data distribution.

Given this ability to train NFs to approximate the conditional posterior distribution of the state of CO2 plume, the proposed approach, outlined in Animation 2, works as follows: Suppose at some timestep we know how to generate samples from the probability distribution for the CO2 plume conditioned on observed time-lapse data for the current state. Given these samples, we prepare the neural network for the next timestep by producing samples for the state of the CO2 plume at that timestep via in silico simulations that capture our current understanding of the CO2 dynamics. By coupling these time-advanced CO2 plumes to simulated synthetic time-lapse seismic data, training pairs (state of CO2 plume and seismic data) are created to update the conditional NF, which learns by example. With the updated NF, samples from the updated probability distribution can be drawn when the new time-lapse field data arrives at the next timestep. By following this procedure recursively, knowledge captured by the NF—i.e. the posterior distribution for the state of the CO2 plume given the observed time-lapse data—remains current throughout CO2 injection projects. During the Training Phase, the NF inside the Digital Twin is trained to approximate the posterior distribution for the next time-lapse timestep. Samples for the state of the CO2 plume conditioned by the observed time-lapse field data are computed during the Inference Phase.

The design of our Digital Twin overlaps with five out of nine featured motives in a recent paper on Simulation Intelligence (Lavin et al. 2021)—(i) surrogate modeling of CO2 dynamics; (ii) stochastic programming to generate sealed and leaking CO2 plumes; (iii) simulation-based inference to approximate the posterior distribution of CO2 conditioned by observed seismic data; (iv) differentiable programming to couple neural network surrogates with wave simulations and their hand-derived sensitivities; (v) machine programming to automatically generate highly-optimized code from the mathematical abstractions of Devito—to carry out symbolic finite difference computations, JUDI—to carry out inversion with Devito in Julia; InvertibleNetworks—to build high-performance building blocks for invertible neural networks in the Julia programming language; and integration with machine learning via ChainRules.jl.

Figure 8 illustrates the “lifecycle” of our envisioned Digital Shadow for the first timestep, k = 1. A Digital Shadow corresponds to a Digital Twin but misses the ability to make decisions and optimize operations.