Random sampling: new insights into the reconstruction of coarsely-sampled wavefields

| (2) |

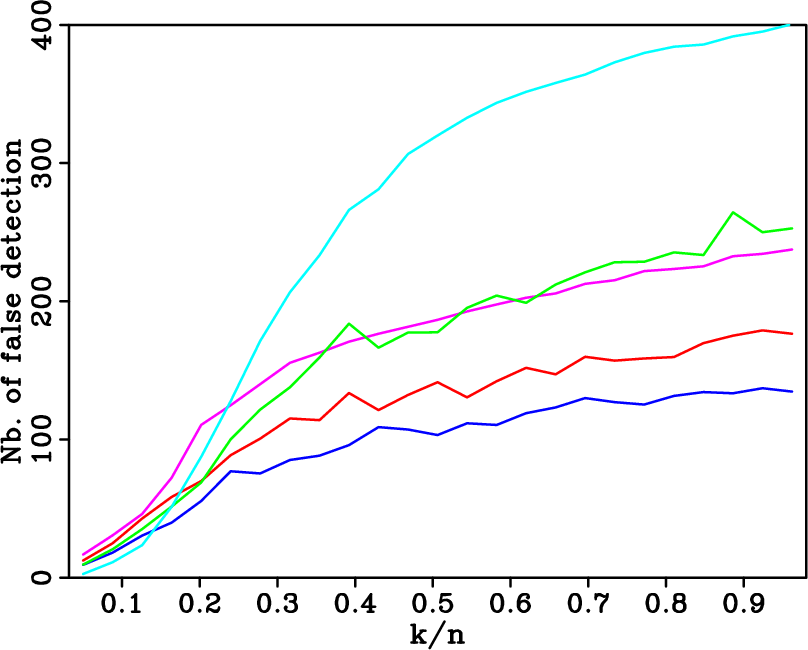

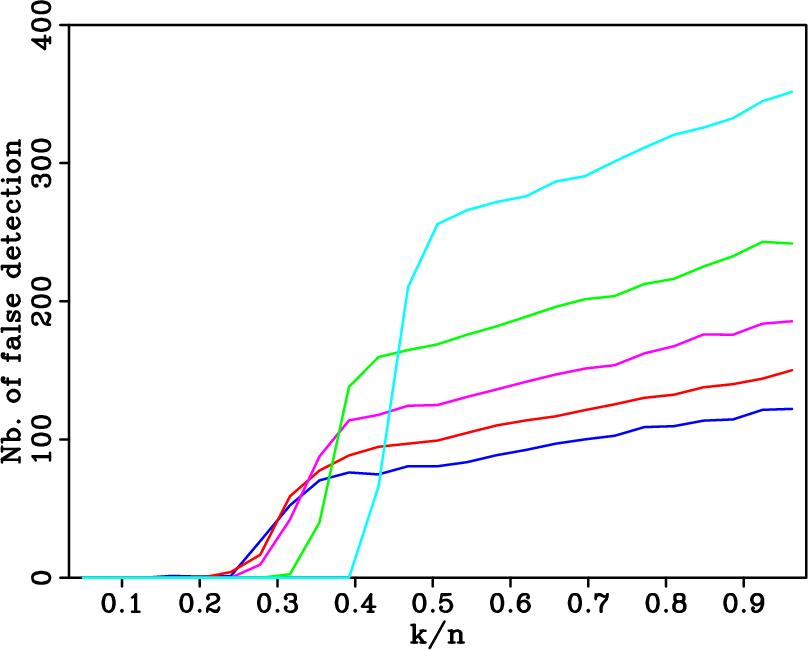

Figure 2. Average recovery curves from sub-Nyquist rate samplings using nonlinear sparsity-promoting optimization. Average recovery curves (a) from regular sub-samplings by
