Abstract

Simultaneous source marine acquisition has been recognized as an economic way of improving spatial sampling and speedup acquisition time, where a single- (or multiple-) source vessel fires at jittered source locations and time instances. Consequently, the acquired simultaneous data volume is processed to separate the overlapping shot records resulting in densely sampled data volume. It has been shown in the past that the simultaneous source acquisition design and source separation process can be setup as a compressed sensing problem, where conventional seismic data is reconstructed from simultaneous data via a sparsity-promoting optimization formulation. While the recovery quality of separated data is reasonably well, the recovery process can be computationally expensive due to transform-domain redundancy. In this paper, we present a computationally tractable rank-minimization algorithm to separate simultaneous data volumes. The proposed algorithm is suitable for large-scale seismic data, since it avoids singular-value decompositions and uses a low-rank based factorized formulation instead. Results are illustrated for simulations of simultaneous time-jittered continuous recording for a 3D ocean-bottom cable survey.

Introduction

Simultaneous source marine acquisition mitigates the challenges posed by conventional marine acquisition in terms of sampling and survey efficiency, since more than one shot can be fired at the same time (Beasley et al., 1998; Kok and Gillespie, 2002; Berkhout, 2008; Beasley, 2008; Hampson et al., 2008). The final objective of source separation is to get interference-free shot records. Wason and Herrmann (2013) have shown that the challenge of separating simultaneous data can be addressed through a combination of tailored single- (or multiple-) source simultaneous acquisition design and curvelet-based sparsity-promoting recovery. The idea is to design a pragmatic time-jittered marine acquisition scheme where acquisition time is reduced and spatial sampling is improved by separating overlapping shot records and interpolating jittered coarse source locations to fine source sampling grid. While the proposed sparsity-promoting approach recovers densely sampled conventional data reasonably well, it poses computational challenges since curvelet-based sparsity-promoting methods can become computationally intractable—in terms of speed and memory storage—especially for large-scale 5D seismic data volumes.

Recently, nuclear-norm minimization based methods have shown the potential to overcome the computational bottleneck (Kumar et al., 2015a), hence, these methods are successfully used for source separation (Maraschini et al., 2012; Cheng and Sacchi, 2013; Kumar et al., 2015b). The general idea is that conventional seismic data can be well approximated in some rank-revealing transform domain where the data exhibit low-rank structure or fast decay of singular values. Therefore, in order to use nuclear-norm minimization based algorithms for source separation, the acquisition design should increase the rank or slow the decay of the singular values. In Kumar et al. (2015b) we used nuclear-norm minimization formulation to separate simultaneous data acquired from an over/under acquisition design, where the separation is performed on each monochromatic data matrix independently. However, by virtue of the design of the simultaneous time-jittered marine acquisition we formulate a nuclear-norm minimization formulation that works on the temporal-frequency domain—i.e., using all monochromatic data matrices together. One of the computational bottlenecks of working with the nuclear-norm minimization formulation is the computation of singular values. Therefore, in this paper we combine the modified nuclear-norm minimization approach with the factorization approach recently developed by Lee et al. (2010). The experimental results on a synthetic 5D data set demonstrate successful implementation of the proposed methodology.

Methodology

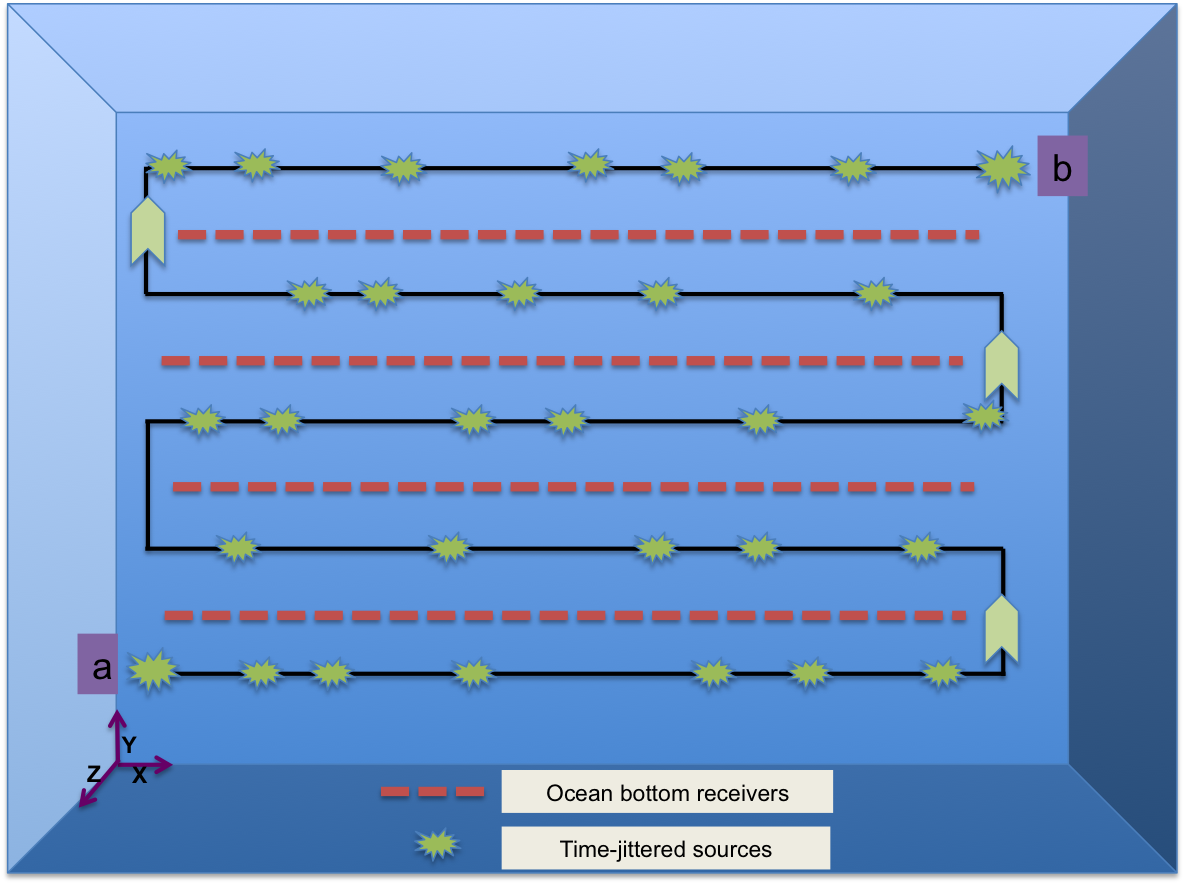

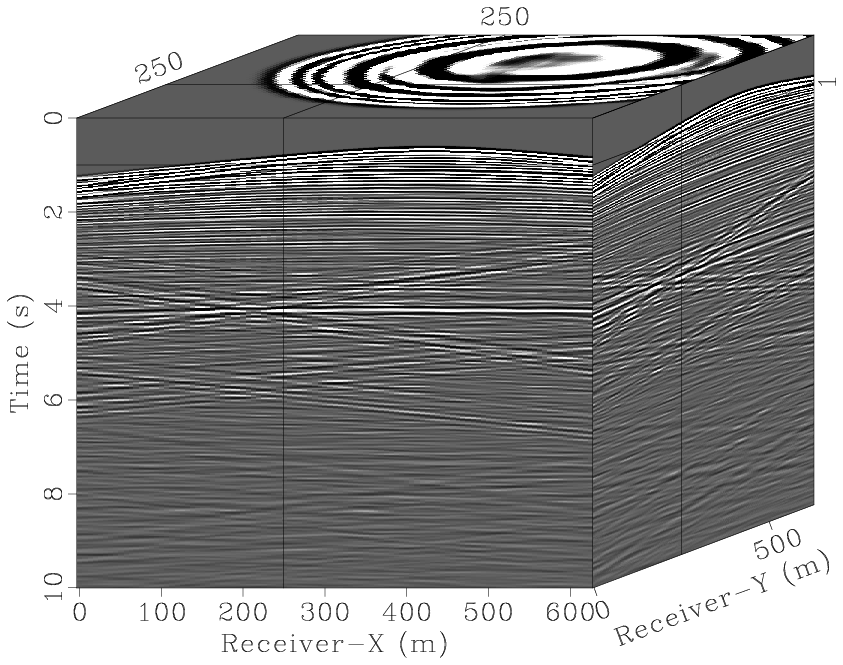

Simultaneous source separation problem can be perceived as a rank-minimization problem. In this paper, we follow the time-jittered marine acquisition setting proposed by Wason and Herrmann (2013), where a single source vessel sails across an ocean-bottom array firing two airgun arrays at jittered source locations and time instances with receivers recording continuously (Figure 1). This results in a continuous time-jittered simultaneous data volume.

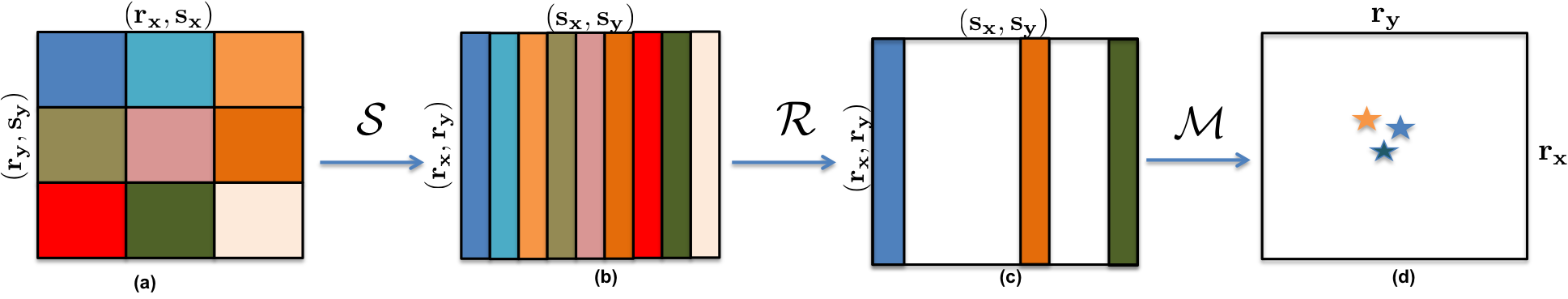

Conventional 5D seismic data volume can be represented as a tensor \(\vector{D}\) \(\in \mathbb{C}^{n_f\times n_{rx} \times n_{sx} \times n_{ry} \times n_{sy}}\), where \((n_{sx}, n_{sy})\) and \((n_{rx}, n_{ry})\) represents number of sources and receivers along \(x, y\) coordinates and \(n_f\) represents number of frequencies. The aim is to recover the data volume \(\vector{D}\) from the continuous time-domain simultaneous data volume \(\vector{b}\) \(\in \mathbb{C}^{n_{T} \times n_{rx} \times n_{ry}}\) by finding a minimum rank solution \(\vector{D}\) that satisfies the system of equations \(\mathcal{A}(\vector{D}) = \vector{b}\). Here, \(\mathcal{A}\) represents a linear sampling-transformation operator, \(n_{T} < (n_t \times n_{sx} \times n_{sy})\) is the total number of time samples in the continuous time-domain simultaneous data volume, \(n_t\) is the total number of time samples in the conventional seismic data. Note that the operator \(\mathcal{A}\) maps \(\vector{D}\) to a lower dimensional simultaneous data volume \(\vector{b}\) since the acquisition process superimposes shot records shifted with respect to their firing times. The sampling-transformation operator \(\mathcal{A}\) is defined as \(\mathcal{A}= {\mathcal{M}\mathcal{R}\mathcal{S}}\), where the operator \(\mathcal{S}\) permutes the tensor coordinates from \((n_{rx}, n_{sx}, n_{ry}, n_{sy})\) (rank-revealing domain, i.e., Figure 2 a) to \((n_{rx}, n_{ry}, n_{sx}, n_{sy})\) (standard acquisition ordering, i.e., Figure 2 b) and its adjoint reverses this permutation. The restriction operator \(\mathcal{R}\) subsamples the conventional data volume at jittered source locations (Figure 2 c), the sampling operator \(\mathcal{M}\) maps the conventional subsampled temporal-frequency domain data to the simultaneous time-domain data (Figure 2 d). Note that Figure 2 d represents a time slice from the continuous (simultaneous) data volume where the stars represent locations of jittered sources in the simultaneous acquisition.

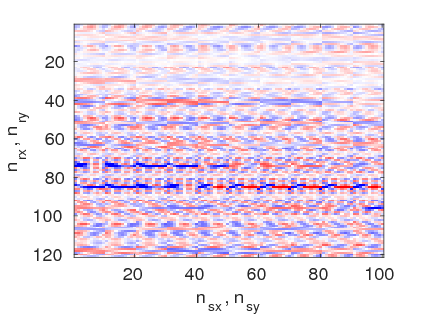

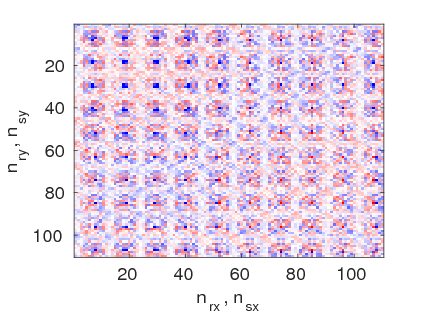

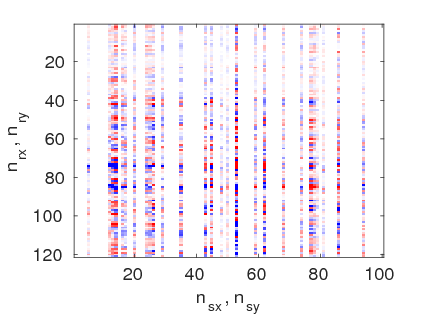

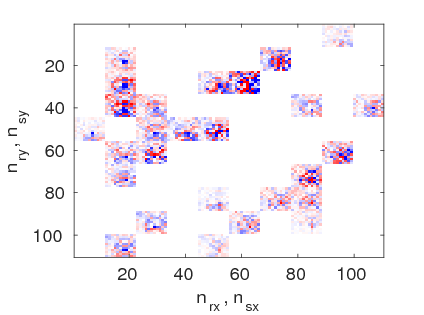

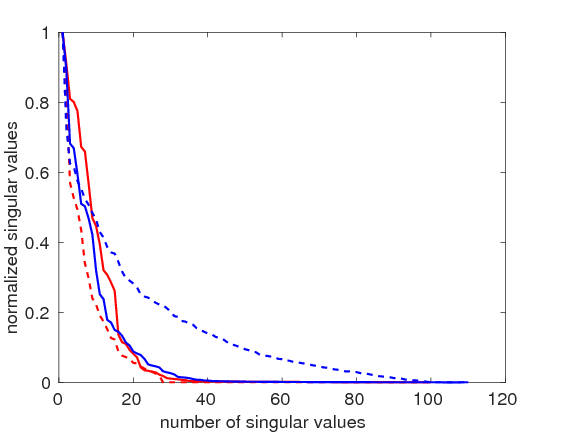

Rank-minimization formulations require that the target data set should exhibit a low-rank structure or fast decay of singular values. Consequently, the sampling-restriction \(\mathcal{MR}\) operation should increase the rank or slow the decay of singular values. As we know, there is no unique notion of rank for tensors, therefore, we can choose the rank of different matricizations of \(\vector{D}\) (Kreimer and Sacchi, 2012) where the idea is to create the matrix \(\vector{D}^{(i)}\) by group the dimensions of \(\vector{D}^{(i)}\) specified by \(i\) and vectorize them along the rows while vectorizing the other dimensions along the columns. In this work, we consider the matricization proposed by Silva and Herrmann (2013), where \(i = (n_{sx}, n_{sy})\)—i.e., placing both source coordinates along the columns (Figure 3a), or \(i = (n_{rx}, n_{sx})\)—i.e., placing receiver-x and source-x coordinates along the columns (Figure 3b). As we see in Figure 3e, the matricization \(i = (n_{sx}, n_{sy})\) has higher rank or slow decay of the singular values (solid red curve) compared to the matricization \(i = (n_{rx}, n_{sx})\) (solid blue curve). The sampling-restriction operator removes random columns in the matricization \(i = (n_{sx}, n_{sy})\) (Figure 3c), as a result the overall singular values decay faster (dotted red curve). This is because missing columns put the singular values to zero, which is opposite to the requirement of rank-minimization algorithms. On the other hand, the sampling-restriction operator removes random blocks in the matricization \(i = (n_{rx}, n_{sx})\) (Figure 3d), hence, slowing down the decay of the singular values (dotted blue curve). This scenario is much closer to the matrix-completion problem (Recht et al. (2010)), where samples are removed at random points in a matrix. Therefore, we address the source separation problem by exploiting low-rank structure in the matricization \(i = (n_{rx}, n_{sx})\).

Since rank-minimization problems are NP hard and therefore computationally intractable, Recht et al. (2010) showed that solutions to rank-minimization problems can be found by solving a nuclear-norm minimization problem. Silva and Herrmann (2013) showed that for seismic data interpolation the sampling operator \(\mathcal{M}\) is separable, hence, data can be interpolated by working on each monochromatic data tensor independently. Since in continuous time-jittered marine acquisition, the sampling operator \(\mathcal{M}\) is nonseparable as it is a combined time-shifting and shot-jittering operator, we can not perform source separation independently over different monochromatic data tensors. Therefore, we formulate the nuclear-norm minimization formulation over the temporal-frequency domain as follows: \[ \begin{equation} \min_{\vector{D}} \sum_{j}^{n_f} \|\vector{D}^{(i)}_{j}\|_* \quad \text{subject to} \quad \|\mathcal{A} (\vector{D}) - \vector{b}\|_2 \leq \epsilon, \label{eqnucsum} \end{equation} \] where \(\sum_{j}^{n_f} \| . \|_* = \sum_{j}^{n_f} \|\boldsymbol{\sigma}_j\|_1\) and \(\boldsymbol{\sigma}_j\) is the vector of singular values for each monochromatic data matricization. One of the main drawbacks of the nuclear-norm minimization problem is that it involves computation of the singular-value decomposition (SVD) of the matrices, which is prohibitively expensive for large-scale seismic data. Therefore, we avoid the direct approach to nuclear-norm minimization problem and follow a factorization-based approach (Rennie and Srebro, 2005; Lee et al., 2010; Recht and Ré, 2011). The factorization-based approach parametrizes each monochromatic data matrix \({\vector{D}^{(i)}}\) as a product of two low-rank factors \(\vector{L}^{(i)} \in \mathbb{C}^{(n_{rx} \cdot n_{sx}) \times k}\) and \(\vector{R}^{(i)} \in \mathbb{C}^{(n_{ry} \cdot n_{sy}) \times k}\) such that, \({\vector{D}^{(i)} = {\vector{L}^{(i)}}{\vector{R}^{(i)}}^{H}}\), where \(k\) represents the rank of the underlying matrix and \(^H\) represents the Hermitian transpose. Note that tensors \(\vector{L}, \vector{R}\) can be formed by concatenating each matrix \({\vector{L}^{(i)}}, {\vector{R}^{(i)}}\), respectively. The optimization scheme can then be carried out using the tensors \(\vector{L}, \vector{R}\) instead of \(\vector{D}\), thereby significantly reducing the size of the decision variable from \(n_{rx} \times n_{ry} \times n_{sx} \times n_{sy} \times n_f\) to \(2k \times n_{rx} \times n_{sx} \times n_f\) when \(k \leq n_{rx} \times n_{sx}\). Following Rennie and Srebro (2005), the sum of the nuclear norm obeys the relationship: \[ \begin{equation*} {\sum_{j}^{n_f}}\|\vector{D}^{(i)}_{j}\|_* \leq {\sum_{j}^{n_f}}\frac{1}{2} \|{\vector{L}^{(i)}_{j}} {\vector{R}^{(i)}_{j}}\|_F^2, \end{equation*} \] where \(\|\cdot\|_F^2\) is the Frobenius norm of the matrix (sum of the squared entries).

Experiments & Results

We test the efficacy of our method by simulating a synthetic 5D data set using the BG Compass velocity model (provided by the BG Group) which is a geologically complex and realistic model. We also quantify the cost savings associated with simultaneous acquisition in terms of an improved spatial-sampling ratio defined as a ratio between the spatial grid interval of observed simultaneous time-jittered acquisition and the spatial grid interval of recovered conventional acquisition. The speed-up in acquisition is measured using the survey-time ratio (STR), proposed by Berkhout (2008), which measures the ratio of time of conventional acquisition and simultaneous acquisition.

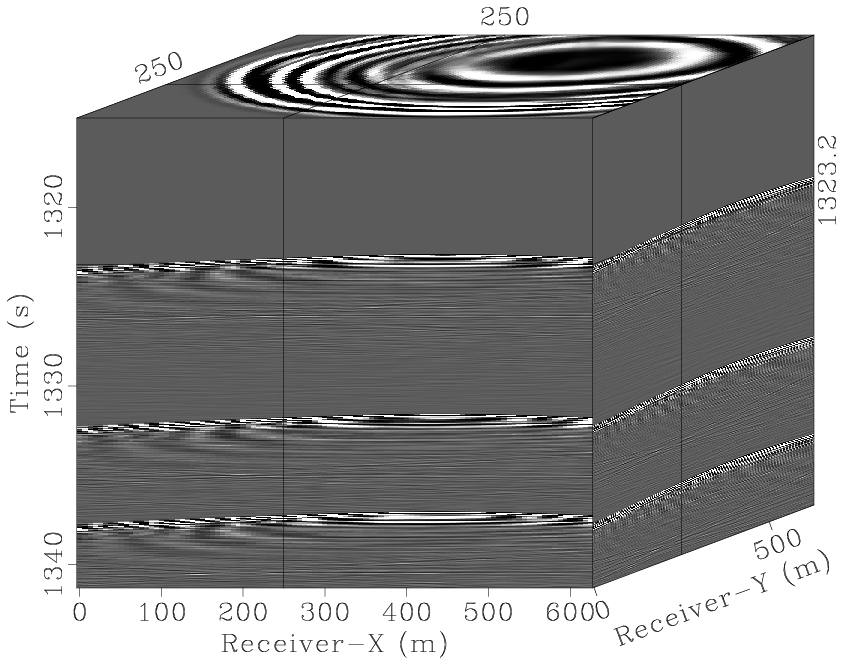

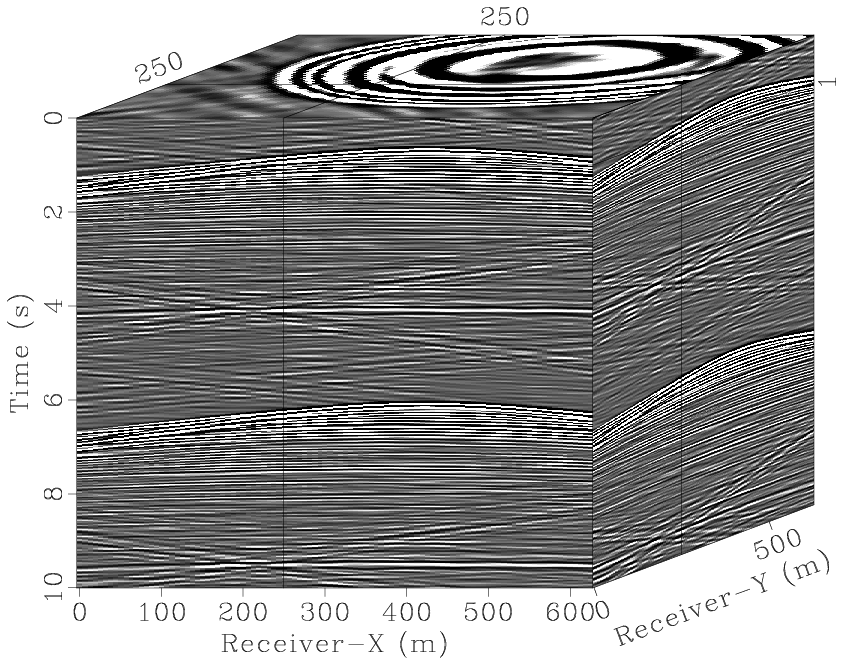

Using a time-stepping finite-difference modelling code provided by Chevron, we simulate a conventional 5D data set of dimensions \(2501 \times 101 \times 101 \times 40 \times 40\) (\(n_t \times n_{rx} \times n_{ry} \times n_{sx} \times n_{sy}\)) over a survey area of approximately \(4\, \mathrm{km} \times 4\, \mathrm{km}\). Conventional time-sampling interval is \(4.0\, \mathrm{ms}\), source- and receiver-sampling interval is \(6.25\, \mathrm{m}\). We use a Ricker wavelet with central frequency of \(15.0\, \mathrm{Hz}\) as source function. Figure 4a shows a conventional common-shot gather. Applying the sampling-transformation operator (\(\mathcal{A}\)) to the conventional data generates approximately \(65\) minutes of 3D continuous time-domain simultaneous seismic data, \(30\) seconds of which is shown in Figure 4b. By virtue of the design of the simultaneous time-jittered acquisition, the simultaneous data volume \(\vector{b}\) is \(4\)-times subsampled compared to conventional acquisition. Consequently, the spatial sampling of recovered data is improved by a factor of \(4\) and the acquisition time is reduced by the same factor.

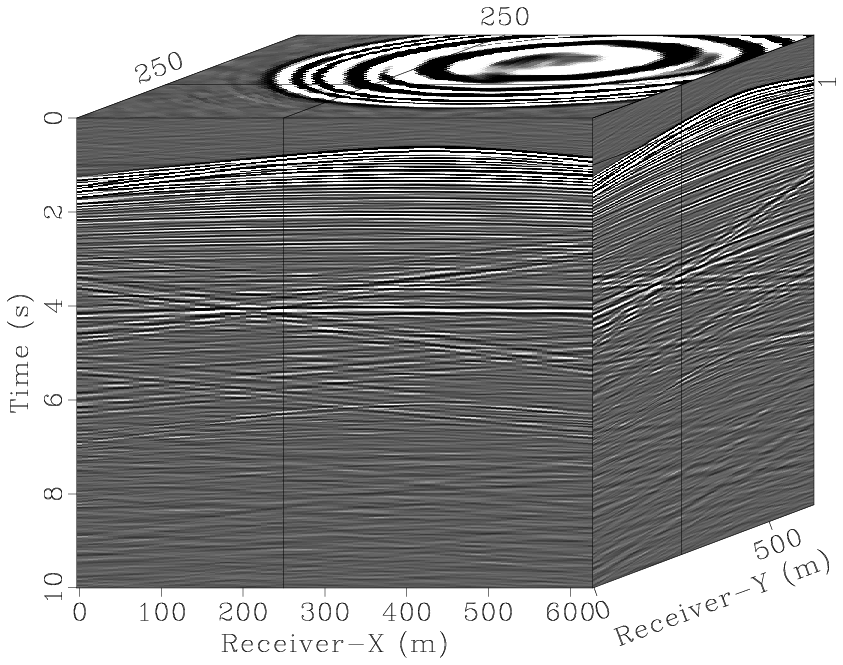

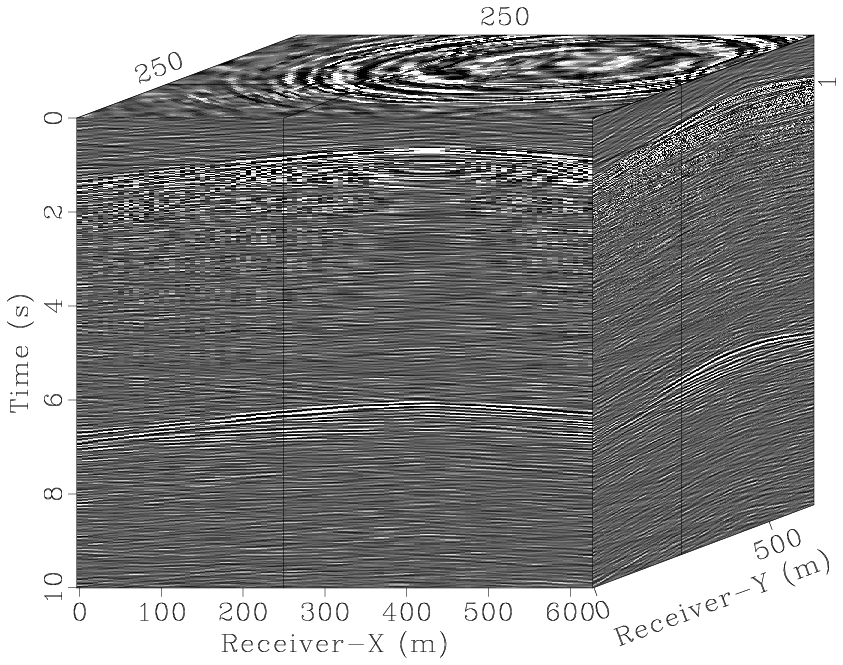

Simply applying the adjoint of the sampling operator \(\mathcal{M}\) to simultaneous data \(\vector{b}\) results in strong interferences from other sources as shown in Figure 4c. Therefore, to recover the interference-free conventional seismic data volume from the simultaneous time-jittered data, we solve the factorization based nuclear-norm minimization formulation. We perform the source separation for a range of rank \(k\) values of the two low-rank factors \(\vector{L}^{(i)}, \vector{R}^{(i)}\) and find that \(k = 100\) gives the best signal-to-noise ratio (SNR) of the recovered conventional data. Figure 4d shows the recovered shot gather, with an SNR of \(20.8\, \mathrm{dB}\), and the corresponding residual is shown in Figure 4e. As illustrated, we are able to separate the shots along with interpolating the data to the finer grid of \(6.25\, \mathrm{m}\). To establish that we loose very small coherent energy during source separation, we intensify the amplitudes of the residual plot by a factor of \(8\) (Figure 4e). The late arriving events, which are often weak in energy, are also separated reasonably well. Computational efficiency of the rank-minimization approach—in terms of the memory storage—in comparison to the curvelet-based sparsity-promoting approach is approximately \(7.2\) when compared with 2D curvelets and \(24\) when compared with 3D curvelets.

Conclusions

We propose a factorization based nuclear-norm minimization formulation for simultaneous source separation and interpolation of 5D seismic data volume. Since the sampling-transformation operator is nonseparable in the simultaneous time-jittered marine acquisition, we formulate the factorization based nuclear-norm minimization problem over the entire temporal-frequency domain, contrary to solving each monochromatic data matrix independently. We show that the proposed methodology is able to separate and interpolate the data to a fine underlying grid reasonably well. The proposed approach is computationally memory efficient in comparison to the curvelet-based sparsity-promoting approach.

Acknowledgements

We would like to thank the BG Group for permission to use the synthetic Compass velocity model, Chevron for providing the 3D time-stepping finite-difference modelling code and Tristan van Leeuwen for valuable discussions. The authors wish to acknowledge the SENAI CIMATEC Supercomputing Center for Industrial Innovation, with support from BG Brasil and the Brazilian Authority for Oil, Gas and Biofuels (ANP), for the provision and operation of computational facilities and the commitment to invest in Research & Development. This work was financially supported in part by the Natural Sciences and Engineering Research Council of Canada Collaborative Research and Development Grant DNOISE II (CDRP J 375142-08). This research was carried out as part of the SINBAD II project with the support of the member organizations of the SINBAD Consortium.

Beasley, C. J., 2008, A new look at marine simultaneous sources: The Leading Edge, 27, 914–917. doi:10.1190/1.2954033

Beasley, C. J., Chambers, R. E., and Jiang, Z., 1998, A new look at simultaneous sources: SEG Technical Program Expanded Abstracts, 17, 133–135. doi:10.1190/1.1820149

Berkhout, A., 2008, Changing the mindset in seismic data acquisition: The Leading Edge, 27, 924–938.

Cheng, J., and Sacchi, M. D., 2013, Separation of simultaneous source data via iterative rank reduction: In SEG technical program expanded abstracts (pp. 88–93). Retrieved from http://dx.doi.org/10.1190/segam2013-1313.1

Hampson, G., Stefani, J., and Herkenhoff, F., 2008, Acquisition using simultaneous sources: The Leading Edge, 27, 918–923. doi:10.1190/1.2954034

Kok, R. de, and Gillespie, D., 2002, A universal simultaneous shooting technique: In. EAGE; Eur. Ass. of Geosc.; Eng., Expanded abstracts.

Kreimer, N., and Sacchi, M. D., 2012, A tensor higher-order singular value decomposition for prestack seismic data noise reduction and interpolation: GEOPHYSICS, 77, V113–V122. doi:10.1190/geo2011-0399.1

Kumar, R., Silva, C. D., Akalin, O., Aravkin, A. Y., Mansour, H., Recht, B., and Herrmann, F. J., 2015a, Efficient matrix completion for seismic data reconstruction: Geophysics, 80, V97–V114. doi:10.1190/geo2014-0369.1

Kumar, R., Wason, H., and Herrmann, F. J., 2015b, Source separation for simultaneous towed-streamer marine acquisition - a compressed sensing approach: Geophysics, 80, WD73–WD88. doi:10.1190/geo2015-0108.1

Lee, J., Recht, B., Salakhutdinov, R., Srebro, N., and Tropp, J., 2010, Practical large-scale optimization for max-norm regularization: In Advances in neural information processing systems, 2010.

Maraschini, M., Dyer, R., Stevens, K., and Bird, D., 2012, Source separation by iterative rank reduction - theory and applications: In 74th eAGE conference and exhibition.

Recht, B., and Ré, C., 2011, Parallel stochastic gradient algorithms for large-scale matrix completion: In Optimization online.

Recht, B., Fazel, M., and Parrilo, P., 2010, Guaranteed minimum rank solutions to linear matrix equations via nuclear norm minimization.: SIAM Review, 52, 471–501.

Rennie, J. D. M., and Srebro, N., 2005, Fast maximum margin matrix factorization for collaborative prediction: In Proceedings of the 22nd international conference on machine learning (pp. 713–719). ACM.

Silva, C. D., and Herrmann, F. J., 2013, Hierarchical Tucker tensor optimization - applications to 4D seismic data interpolation: EAGE annual conference proceedings. doi:10.3997/2214-4609.20130390

Wason, H., and Herrmann, F. J., 2013, Time-jittered ocean bottom seismic acquisition: SEG Technical Program Expanded Abstracts, 32, 1–6. doi:10.1190/segam2013-1391.1