DNOISE III — Exploration Seismology in the Petascale Age

Released to public domain under Creative Commons license type BY.

Copyright (c) 2014 SLIM group @ The University of British Columbia.

Synopsis

This current proposal describes a comprehensive five-year continuation of our research project in dynamic nonlinear optimization for imaging in seismic exploration (DNOISE). DNOISE III—Exploration Seismology in the Petascale Age builds on the proven track record of our multidisciplinary research team that conducts transformative research in the fields of seismic-data acquisition, processing, and wave-equation based inversion. The overarching goals of the DNOISE series of projects can be simply summarized as:

“How to image more deeply and with more detail?” and “How to do more with less data?”

Also, to help overcome the current substantial challenges in the oil and gas industry, we maintain this focus with more specific follow-up questions such as:

“How can we control costs and remove acquisition-related artifacts in 3D (time-lapse) seismic data sets?” and “How can we replace conventional seismic data processing with wave-equation based inversion, control computational costs, assess uncertainties, extract reservoir information and remove sensitivity to starting models?”

To answer these questions, we have assembled an expanded cross-disciplinary research team with backgrounds in scientific computing (SC), machine learning (ML), compressive sensing (CS), hardware design, and computational and observational exploration seismology (ES). With this team, we will continue to drive innovations in ES by utilizing our unparalleled access to high-performance computing (HPC), our expertise and experience in CS and wave-equation based inversion (WEI), and our proven ability to turn our research findings into practical, scalable software implementations of our inversion solutions.

DNOISE II: Status Report

Since its inception in 2010, the DNOISE II grant under the guidance of Felix J. Herrmann from the Department of Earth, Ocean, and Atmospheric Sciences (EOAS), Michael Friedlander from Computer Science, and Ozgur Yilmaz from Mathematics has produced 46 peer-reviewed journal publications (plus six more in review), 95 peer-reviewed expanded abstracts for the CSEG, SEG, and EAGE, and 167 presentations at national and international conferences (not including the 145 presentations given during the Seismic Inversion by Next-generation BAsis function Decompositions (SINBAD) Consortium meetings). During the DNOISE II project, 9 graduate students (2 PhD and 7 MSc) have completed their degrees, and 3 more PhD students are expected to graduate in the first half of 2015. Ten post-doctoral fellows (PDFs) have participated in DNOISE II, and 4 PDFs continue to be employed. This research project has also employed 6 junior scientific programmers, 11 undergraduate co-op students, one senior programmer, and a research associate. Below we describe in detail our main successes (and setbacks), and summarize the new research areas that have grown out of DNOISE II, which we would like to pursue as part of DNOISE III. The following summary mirrors the outline of the original DNOISE II CRD proposal.

Compressive acquisition and sparse recovery

Objectives: Design and implementation of new seismic-data acquisition methodologies that reduce costs by exploiting structure in seismic data.

Seismic data collection is becoming more and more challenging because of increased demands for high-quality, long-offset and wide-azimuth data. As part of the DNOISE II project, we adapted recent results from CS techniques to generate innovations in seismic acquisition that in turn reduced costs and allowed us to collect more data. Over the last 5 years, our research team has made significant contributions in various areas of this general theme. Below we summarize some of the key contributions:

Compressive sensing. One of the main aims of the DNOISE II project has been to leverage ideas from CS to establish a new methodology for ES, where the costs of acquisition and processing are no longer dominated by survey area size but by the sparsity of seismic data volumes. DNOISE II research has resulted in several contributions to the theory and practice of CS. One area of focus has been to investigate ways of incorporating prior information into recovery algorithms. In [1], Hassan Mansour developed an algorithm based on weighted \(\ell_1\)-norm minimization, with theoretical guarantees for improved sparse recovery when the approximate locations of significant transform-domain coefficients are known. This approach led to significant improvements in the wavefield recovery problem [2,3]. Our research also showed that prior information can be incorporated into alternative sparse recovery methods. Examples of this work include recovery by non-convex optimization as shown by Navid Ghadermarzy in [4], approximate message passing [5] with an immediate application to seismic interpolation [6], low-rank matrix recovery [7], and the solution of overdetermined systems with sparse solutions using a modified Kaczmarz algorithm [8]. Finally, we examined the synthesis-or-analysis problem that arises in theoretical signal processing in the context of curvelet-based seismic data interpolation by sparse optimization, as described in the work of Tim Lin [9] and Brock Hargreaves [10]. We proposed an inversion procedure that exhibits uniform improvement over existing curvelet-based regularization via sparse inversion (CRSI) algorithms by demanding that the procedure only return physical signals that naturally admit sparse coefficients under canonical curvelet analysis. We have also applied curvelet denoising to enhance crustal reflections [11]. This technique is now widely used by Chevron in Canada. Outcome. Fast compressive-sensing based seismic interpolation and denoising that uses prior support information.
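
As a hedged illustration of the weighted \(\ell_1\) idea (the symbols here are notational assumptions for this sketch, not taken from [1]): given measurements \(b \approx Ax\) and an estimate \(\widetilde{T}\) of where the significant transform-domain coefficients live, one can pose

\[
\min_{x}\ \sum_{i} w_i\,|x_i| \quad \text{subject to} \quad \|Ax - b\|_2 \le \epsilon,
\qquad
w_i = \begin{cases} \omega, & i \in \widetilde{T},\\ 1, & i \notin \widetilde{T}, \end{cases}
\]

with \(0 \le \omega \le 1\). Choosing \(\omega = 1\) gives back standard \(\ell_1\) minimization, while smaller values of \(\omega\) penalize coefficients inside the estimated support less, which is how the prior information enters the recovery.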

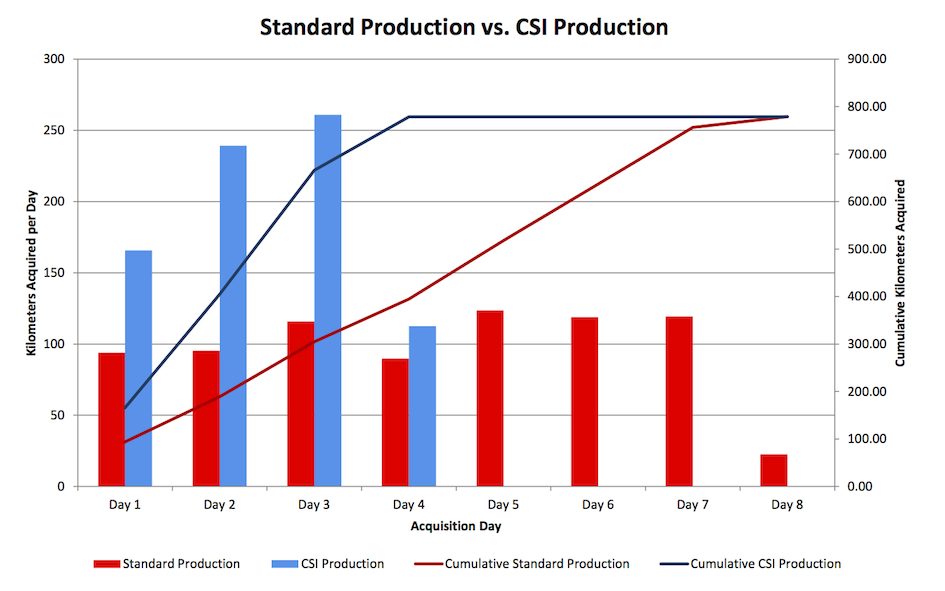

Randomized marine acquisition with time jittering. One of the ideas CS relies on is the use of random sampling as a means of dimension reduction. Motivated by this, we have worked on establishing connections between random time dithering and jittered sampling in space. Specifically, we can recover high-quality seismic data volumes from time-jittered marine acquisition where the average inter-shot time is significantly reduced, leading to cheaper surveys despite the resulting overlap between shots. The time-jittered acquisition, in conjunction with shot separation by curvelet-domain sparsity promotion, allows us to recover high-quality data volumes [12–15]. This line of work has been widely recognized by the oil and gas industry. For example, ConocoPhillips ran a highly successful field trial on marine acquisition with CS and obtained significant improvements compared to standard production. See Figure 1, where the costs of conventional and compressive acquisition are compared. Recently, we have embarked on adapting techniques from distributed CS to time-lapse seismic data sets. We have proposed a radical new approach to processing 4-D seismic that exploits shared information between the baseline and monitor surveys. With this new approach, Felix Oghenekohwo, in collaboration with Rajiv Kumar and Haneet Wason, was able to shed new light on the fundamental repeatability requirements in time-lapse seismic data [16–19]. We are delighted to report that our new method for randomized marine acquisition has been successfully used in the field for acquisitions with ocean-bottom nodes [20]. On another very encouraging note, the industry is pursuing randomized sampling in other settings [20], including simultaneous-source coil sampling [21]. Outcome. Innovations in seismic acquisition design and recovery techniques resulting in significant interest and increasing uptake by industry due to costs being cut by at least a factor of two.
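
To make the notion of jittered sampling concrete, here is a minimal Python sketch. It is an illustration only: the function name, the bin layout, and the parameter values are assumptions for this example and do not reproduce the acquisition designs of [12–15]. Each shot fires at a random time inside its own regular time bin, so the firing times are randomized while the gap between successive shots stays bounded.

```python
import numpy as np

def jittered_firing_times(n_shots, mean_interval, rng):
    """Draw one firing time uniformly at random inside each regular time bin."""
    # Left edges of the regular bins: 0, dt, 2*dt, ...
    bin_starts = mean_interval * np.arange(n_shots)
    # A random offset inside each bin; successive shots are therefore never
    # more than 2 * mean_interval apart, unlike fully uniform random sampling.
    offsets = rng.uniform(0.0, mean_interval, size=n_shots)
    return bin_starts + offsets

rng = np.random.default_rng(0)
times = jittered_firing_times(n_shots=8, mean_interval=5.0, rng=rng)
gaps = np.diff(times)
print("firing times (s):", np.round(times, 2))
print("largest gap (s):", round(float(gaps.max()), 2))
```

The bounded gap is the practical reason to prefer jittered over fully random sampling: it randomizes the acquisition without leaving large unsampled stretches.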

Matrix & tensor completion. Our team also focussed on leveraging low-rank structure in seismic data to solve extremely large data recovery problems. In [7] we introduced a large-scale Singular-Value Decomposition (SVD)-free optimization framework that is robust with respect to outliers and that uses information on the “support”. We used this framework for seismic data interpolation [22] and recently extended it to include regularization so it can be used on “off-the-grid” data [23]. Our findings show that major improvements are possible compared to curvelet-based reconstruction [24]. Furthermore, we also used low-rank structure for source separation [25] with short dither times where curvelet-based techniques typically fail. Aside from working on matrix-completion problems relevant to missing-trace interpolation, we also started a research program extending these ideas to tensor completion problems. Specifically, we have developed the algorithmic components for solving optimization problems in the hierarchical Tucker format, a relatively new decomposition method for representing high-dimensional arrays [26]. As a result, we are able to solve tensor completion problems, i.e., missing-trace recovery problems on four-dimensional frequency slices, with matrix factorizations [7,22,25] and optimization on hierarchical Tucker representations [26–28]. Other applications of our matrix completion techniques include lifting methods where non-convex problems are convexified by turning them into semi-definite programs [29]. Ernie Esser applied these methods to the blind deconvolution problem [30], where both the wavelet and the reflectivity are unknown, and to higher-order interferometric inversion [31], where Rongrong Wang extended recent work of Demanet in [32] to the nonlinear case and to include errors on the source and receiver side [31]. Outcome: A scalable framework for matrix and tensor completion with applications to time-jittered marine acquisition and lifting.
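
The following Python sketch illustrates what "SVD-free" can mean in practice: the unknown matrix is parametrized as a product of two thin factors, so no singular-value decomposition of the full matrix is ever formed. It uses plain alternating least squares on a small synthetic example, which is a stand-in chosen for brevity and not the solver of [7]; the function name, the regularization value, and the test matrix are assumptions.

```python
import numpy as np

def complete_low_rank(Y, mask, rank, n_iter=100, reg=1e-3, seed=0):
    """Fill in missing entries of Y by alternating least squares on X = L @ R.T."""
    rng = np.random.default_rng(seed)
    n_rows, n_cols = Y.shape
    L = rng.standard_normal((n_rows, rank))
    R = rng.standard_normal((n_cols, rank))
    eye = reg * np.eye(rank)
    for _ in range(n_iter):
        # Update each row of L from the observed entries in that row of Y.
        for i in range(n_rows):
            Ri = R[mask[i]]
            L[i] = np.linalg.solve(Ri.T @ Ri + eye, Ri.T @ Y[i, mask[i]])
        # Update each row of R from the observed entries in that column of Y.
        for j in range(n_cols):
            Lj = L[mask[:, j]]
            R[j] = np.linalg.solve(Lj.T @ Lj + eye, Lj.T @ Y[mask[:, j], j])
    return L @ R.T

# Synthetic rank-2 "data" with roughly 40% of the entries missing.
rng = np.random.default_rng(1)
X_true = rng.standard_normal((30, 2)) @ rng.standard_normal((2, 30))
mask = rng.random((30, 30)) < 0.6
X_hat = complete_low_rank(np.where(mask, X_true, 0.0), mask, rank=2)
rel_err = np.linalg.norm(X_hat - X_true) / np.linalg.norm(X_true)
print(f"relative recovery error: {rel_err:.2e}")
```

Each update only solves small `rank`-by-`rank` systems, which is why factorized formulations of this kind scale to problem sizes where a full SVD is out of reach.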

Large-scale structure revealing optimization. All of the above approaches depend on access to fast large-scale solvers. DNOISE has made major contributions to the development of large-scale solvers, resulting in SPG\(\ell_1\) [33], one of the fastest software implementations [34,35] of sparsity promoting problems. Brock ported this code into Total SA’s system. A parallel optimized version of this drives many of our applications. When the optimizations are carried out over higher dimensional objects such as matrices and tensors, it is crucial that these optimizations do not rely on carrying out SVDs over the full ambient dimension. As part of DNOISE II, our group continued to spearhead developments of state-of-the-art solvers [7,26]. Under the supervision of Michael Friedlander, Ives Macedo investigated a new generation of convex optimization algorithms for low-rank matrix recovery that do not require explicit factorizations of the matrices. The first step of this research program, developing an understanding of the mathematical underpinnings of this special type of optimization, co-authored with Ting Kei Pong is given in [36]. Proximal-gradient methods form the algorithmic template for almost all methods used in sparse optimization. Gabriel Goh developed proximal-gradient solvers that use tree-based preconditioners, which are structured Hessian approximations built from an underlying graph-representation of the relationship between the variables in the problem. At the same time, PhD student Julie Nutini researched rigorous approaches for seamlessly incorporating conjugate-gradient-like iterations, which are well understood and effective, into the proximal-gradient framework. Outcome. Versatile and structure-promoting solvers for large-scale problems in ES.

Free-surface removal

Objectives: Wave-equation-based mitigation of the free surface by sparse inversion.

During DNOISE II, Tim Lin made significant contributions to the mitigation of free-surface multiples. The impacts of his innovative work on enhancing the Estimation of Primaries by Sparse Inversion (EPSI) technique are manifold, including a well-cited paper [37] and various refereed conference papers that cover efficient multilevel formulations [38] and a method to mitigate the effects of near-offset gaps [39]. His method has been implemented on sail lines in 3D during an internship with Chevron, proving the scalability of his approach. With Bander Jumah, we have used randomized SVDs in combination with hierarchical matrix representations to reduce the memory use and the matrix-matrix multiplication costs of EPSI [40–42]. With our long-term visitor Joost van der Neut, we extended this wavefield inversion framework to interferometric imaging, which led to several presentations and a journal publication [43–45]. Outcome: Industry-ready scalable solutions to surface-related multiple and interferometric redatuming problems.

Compressive modelling for imaging and inversion

Objectives: Design and implementation of efficient wavefield simulators in 2- and 3D.

Solving large-scale time-harmonic Helmholtz systems under the constraints imposed by inversion is extremely challenging. The system is indefinite, and we have to deal with many sources (right-hand sides) and many linearizations as part of the inversion. This means that a formulation is needed that has a small memory footprint, a small setup cost, and a preconditioner that is easy to implement and works for different types of wave physics. To control the computational costs, we also require a formulation that reduces the number of sources and gives us control over the accuracy, so that the simulations are suitable for an inversion framework that works with subsets of data (shots) at reduced accuracy. To meet these constraints, Tristan van Leeuwen implemented a 3D simulation framework based on Kaczmarz sweeps [46]. This solver is part of our efficient 3D full-waveform inversion framework with controlled sloppiness [46,47]. As part of his MSc research, Art Petrenko implemented this algorithm on the accelerators of Maxeler [48,49]. Rafael Lago extended our approach by using minimal residual Krylov methods to solve the acoustic time-harmonic wave equation [50]. Rafael also worked on improved discretizations of the Helmholtz system and on more sophisticated preconditioners. We also implemented proper discretizations of the wave equation along with implementations of gradients, Jacobians, and Hessians that satisfy Taylor expansions of the objectives in 2D, 3D, and for the multiparameter case. These implementations, which include forward solves, are essential components of wave-equation based inversions [51,52]. Finally, we investigated utilizing depth-extrapolation with the full wave equation [53]. Outcome: A practical implementation of a scalable, object-oriented parallelized simulation framework in 2- and 3D for time-harmonic wave equations.
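
For readers unfamiliar with Kaczmarz sweeps, the sketch below shows the basic row-projection iteration in Python on a small, well-conditioned real system. It illustrates the building block only: the solver in [46] targets large indefinite Helmholtz systems and is not reproduced here, and the test matrix, relaxation parameter, and sweep count are assumptions for this example.

```python
import numpy as np

def kaczmarz_double_sweep(A, b, x, relax=1.0):
    """One forward plus one backward pass of row projections for A x = b."""
    n_rows = A.shape[0]
    order = list(range(n_rows)) + list(range(n_rows - 1, -1, -1))
    for i in order:
        a = A[i]
        # Move x toward the hyperplane {x : a . x = b_i} defined by row i.
        x = x + relax * (b[i] - a @ x) / np.vdot(a, a).real * a.conj()
    return x

# Toy tridiagonal system standing in for a discretized operator.
n = 20
A = 4.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
x_true = np.linspace(1.0, 2.0, n)
b = A @ x_true

x = np.zeros(n)
for _ in range(300):
    x = kaczmarz_double_sweep(A, b, x)
err = np.linalg.norm(x - x_true)
print(f"error after 300 double sweeps: {err:.2e}")
```

Each projection touches a single row of the matrix, so the memory footprint and setup cost stay small, which matches the constraints listed above.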

Compressive wave-equation based imaging and inversion

Objectives: Design and implementation of an efficient and robust wave-equation based inversion framework leveraging recent developments in ML, CS, sparse recovery, robust statistics, and optimization.

DNOISE II research has also made significant contributions to “high-frequency” reflection-based imaging that allows us to carry out inversions at greatly reduced costs while removing sensitivity to starting models. Xiang Li worked on sparsity-promoting imaging with CS [54] and approximate message passing [55] techniques that yield an inverted image roughly at the cost of a single reverse-time migration with all data. We consider this a major accomplishment. Ning Tu built on this work and developed a method to image with multiples and source estimation, once again at the cost of roughly one reverse-time migration with all data [56,57]. This effectively removes the computational costs of EPSI and is widely considered as a fundamental breakthrough. Tristan and Rajiv also made significant contributions to wave-equation migration velocity analysis with full-subsurface offset extended image volumes by calculating the action of these volumes on probing vectors [58]. Since it uses random matrix probing, this technique avoids forming these computationally prohibitive extended image volumes explicitly. This method allows us to conduct linearized Amplitude-Versus-Offset analysis (AVO) including geologic dip estimation [59]. Random matrix probings are also used by randomized SVDs and preconditioning [60] of the wave-equation Hessian. Finally, Lina Miao worked on imaging with depth-stepping using randomized hierarchical semi-separable matrices [61]. This approach has advantages over reverse-time migration because it treats turning waves differently [62].

Our team has also made important progress in full-waveform inversion. First, we firmly established randomized source encoding [63] as an instance of stochastic optimization [64,65]. Tristan extended this work to marine data [66], using results from batching [67] derived by Michael Friedlander and Mark Schmidt that give conditions for convergence of (convex) optimization problems. This research is essentially based on the “intuitive” premise that data misfit and gradient calculations do not need to be accurate at the beginning of an iterative optimization procedure, when the model explains the data poorly. This work was implemented by WesternGeco and several others and has led to four- to five-fold increases in the speed of full-waveform inversion, making the difference between loss and profit. In our extension to the marine case [66,68], Tristan also introduced a new method for on-the-fly source estimation [69] that formed the basis for his later research with Sasha Aravkin on nuisance-parameter estimation with variable projection [70]. Nuisance parameters include unknown source scalings, weightings, or certain statistical parameters, including parameters of the Student's t-distribution. Our work on source estimation with variable projection has produced several publications [71], and has been integrated into Total SA’s production full-waveform inversion codes [72].

To make our inversions more stable with respect to outliers, Sasha introduced misfit functions that are robust to such outliers. This work resulted in peer-reviewed conference papers [73–75] and in a generalization of earlier convergence results for batching techniques [76]. While successful, the proposed batching techniques were not constructive. In other words, these techniques did not provide an algorithm to select the appropriate batch sizes (the number of shots partaking in the optimization) and the accuracy of the wave simulations as the inversion progressed. To overcome this problem, Tristan proposed a practical heuristic approach that was presented at major conferences and that has been reported in the mathematical and geophysical literature [47,77].
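
The premise behind batching can be sketched in a few lines of Python: early iterations use a gradient computed from only a few shots, and the batch grows as the model improves. Everything in this example is an assumption made for illustration, including the linear stand-in for the wave simulations, the doubling schedule, and the step size; it is not the heuristic of [47,77] and carries none of the convergence guarantees of [67,76].

```python
import numpy as np

rng = np.random.default_rng(0)
n_shots, n_rec, n_par = 32, 4, 5
# Hypothetical linear "shots": d_i = A_i m_true stands in for a wave simulation.
A = rng.standard_normal((n_shots, n_rec, n_par))
m_true = rng.standard_normal(n_par)
d = A @ m_true                                  # shape (n_shots, n_rec)

def batch_gradient(m, idx):
    """Average least-squares gradient over the shots listed in idx."""
    r = A[idx] @ m - d[idx]                     # residuals, (batch, n_rec)
    return np.einsum("srp,sr->p", A[idx], r) / len(idx)

# Step size from the largest per-shot spectral norm, safe for any batch average.
step = 1.0 / max(np.linalg.norm(Ai, 2) ** 2 for Ai in A)

m = np.zeros(n_par)
batch = 2
for it in range(300):
    idx = rng.choice(n_shots, size=batch, replace=False)
    m = m - step * batch_gradient(m, idx)
    if (it + 1) % 20 == 0:
        # Cheap, rough gradients first; more shots once the model has improved.
        batch = min(2 * batch, n_shots)

rel_err = np.linalg.norm(m - m_true) / np.linalg.norm(m_true)
print(f"relative model error: {rel_err:.2e}")
```

In this toy run the first 80 iterations touch at most half of the shots, which is where the savings come from when every shot requires an expensive wave simulation.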

Aside from relying on increasing the batch size to control the errors, Xiang adapted our accelerated sparsity-promoting imaging techniques to speed up computations of Gauss-Newton updates of full-waveform inversion. This included an extension to marine data and was presented at several conferences [78–80] and as a letter to GEOPHYSICS [81]. Xiang applied this method to Chevron’s blind Gulf of Mexico dataset and presented this work [82] at several full-waveform inversion workshops.

While these approaches to full-waveform inversion addressed important problems regarding the computational cost, estimation of nuisance parameters, and robustness to outliers, this inversion technique remains notoriously sensitive to cycle skipping and, as a consequence, to the accuracy of the starting models. This sensitivity severely limits the applicability of this technology since accurate starting models are generally not available. To derive a formulation that is less prone to cycle skipping, Tristan replaced the PDE-constraint of the “all-at-once” methods with a two-norm penalty, instead of eliminating this constraint as in reduced adjoint-state methods [83]. This approach leads to an extension—i.e., an increase of the degrees of freedom—where the unknowns are now the wavefields for each shot and the earth model. While keeping track of these wavefields is computationally not feasible in the “all-at-once” approach, Tristan overcame this problem by using his variable projection technique. In this method, for each shot, wavefields are calculated that fit both the physics (the PDE) and the observed data, followed by a gradient step that minimizes the PDE residual. This method differs from existing approaches—i.e., the “all-at-once” [83] or contrast-source method [84]—because these wavefields can be calculated for each shot independently, avoiding storage of all wavefields. There are strong indications that this approach is less sensitive to starting models while having additional advantages including sparse Hessians and less storage since there is no adjoint wavefield. This work, which we refer to as Wavefield Reconstruction Inversion (WRI), appeared as an express letter [85], is submitted for publication [86], and was presented at several conferences and invited talks at workshops around the world [87,88]. We also filed a patent on this work [89]. 
Finally, WRI was extended by Bas Peters to the multi-parameter case [52] and by Ernie to include total-variation regularization via scaled projected gradients [90]. We consider this to be groundbreaking because there are clear indications that this formulation is less sensitive to cycle skips. This work will also be presented at the EAGE Distinguished Lecturer Programme 2015 & 2016. Outcome: A framework for wave-equation based inversions that is economically viable and that removes fundamental issues related to outliers, nuisance parameters, and, most importantly, sensitivity to cycle skips, thus relaxing some of the constraints on starting models.
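
For concreteness, the penalty formulation behind WRI can be written as follows. This is a sketch in notation assumed here (it is not quoted from [85,86]): \(A(m)\) is the discretized wave operator for model \(m\), \(q_i\) and \(d_i\) are the source and observed data for shot \(i\), \(P\) restricts a wavefield to the receivers, and \(\lambda\) is the penalty parameter.

\[
\min_{m,\,u_1,\dots,u_K}\ \sum_{i=1}^{K} \tfrac{1}{2}\,\|P u_i - d_i\|_2^2 \;+\; \tfrac{\lambda^2}{2}\,\|A(m)\,u_i - q_i\|_2^2 .
\]

For a fixed model, variable projection replaces each \(u_i\) by the wavefield that best fits both the physics and the data,

\[
\bar{u}_i(m) \;=\; \arg\min_{u}\ \left\| \begin{pmatrix} \lambda A(m) \\ P \end{pmatrix} u - \begin{pmatrix} \lambda q_i \\ d_i \end{pmatrix} \right\|_2^2 ,
\]

which can be solved shot by shot, so the wavefields never need to be stored simultaneously. The model is then updated with a gradient step on the remaining PDE-residual term evaluated at \(\bar{u}_i(m)\).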

Parallel software environment

Objectives: Development and implementation of a scalable parallel development environment to test and disseminate practical software implementations of our algorithms in 2- and 3D to our industrial partners. Team: Henryk Modzelewski (senior programmer) and a team of co-op undergraduate students have been responsible for parallel hardware and software development and maintenance for the DNOISE II research project.

During DNOISE II, we developed an object-oriented and scalable framework that has put us well ahead of the competition. As a result, our software development times are roughly half those of our competitors, while the object-oriented code lets us develop more sophisticated high-level components (e.g., convex solvers and linear algebra in parallel Matlab) and low-level components (e.g., row projections on Maxeler hardware) within an overarching, integrated, and verifiable software framework [51]. Aside from enabling our students to concentrate on the real problems at hand, the framework (including parallel SPOT, a linear operator toolbox; data containers; and map-reduce algorithms based on SWIFT) also allows our researchers to develop numerous parallelized software prototypes. This results in algorithms that are adaptable, easy to understand, and above all scalable. During the DNOISE II project, we developed 25 software releases.

During DNOISE II, our researchers also extensively worked on blind field and synthetic data case studies provided to us by industry. We did this work with Professor Andrew Calvert and Professor Eric Takougang, as well as Professor Mike Warner (Imperial College London) and several members of our research team.

Research dissemination

Aside from presenting our work in 50 journal papers, 100 expanded abstracts, and more than 200 presentations at international conferences and our own meetings, we have presented our findings to other research communities outside geophysics. These latter presentations include prestigious conferences in signal processing and applied mathematics such as the International Conference on Acoustics, Speech and Signal Processing (ICASSP), the IEEE Global Conference on Signal and Information Processing (GlobalSIP), IEEE Statistical Signal Processing, Signal Processing with Adaptive Sparse Structured Representations (SPARS), the International Conference on Sampling Theory and Applications (SampTA), and SPIE Wavelets and Sparsity. Many of these presentations were invited talks and garnered widespread recognition of our work across a variety of scientific disciplines.

For more details on our work on seismic data acquisition, processing, modelling, imaging, migration velocity analysis, full-waveform inversion, CS, and optimization, please refer to the Seismic Laboratory for Imaging and Modelling (SLIM) website, our mind map or the most recent progress report.

DNOISE III

Research Objectives

The field of Exploration Seismology (ES) is in the midst of a “perfect storm”. Conventional oil and gas fields are increasingly difficult to explore and produce calling for more complex wave-equation based inversion (WEI) algorithms that require dense long-offset samplings. These requirements result in exponential growth in data volumes and prohibitive demands on computational resources. As part of DNOISE III—Exploration Seismology in the Petascale Age—we will fundamentally rethink and reinvent the field of computational ES to meet these challenges via a truly cross-disciplinary research program that (i) builds on and further strengthens DNOISE’s proven track record in driving innovations in seismic data acquisition, processing, and wave-equation based inversions, such as reverse-time migration, migration velocity analysis, and full-waveform inversion; (ii) continues to leverage developments in mathematics (e.g., CS), large-scale optimization, and ML to optimally handle “Big data” sets; and (iii) addresses fundamental issues related to sampling, non-uniqueness of inversions, mitigation of risk, and integration with reservoir characterization.

To enhance and extend these research goals we will significantly expand our research team and scope of research in the DNOISE III project. Our primary aim is to define a completely new paradigm for computational seismology that is industry-scale proof, to enable 3D seismic experiments in complex geologies and unconventional plays such as shale gas, and to train a future generation of geophysicists by giving them a solid background in modern-day data science and SC. The main research objectives of DNOISE III are listed below and include elements that vary in risk and academic reward. These objectives are designed to solve both current and long-term challenges faced by industry.

I—Seismic data acquisition for wave-equation based inversion. Mainly due to the efforts of the DNOISE series of CRD grants, the oil and gas industry is at a watershed moment of accepting and implementing randomized [20,21] dense full-azimuth long-offset [91] acquisitions for both marine and land areas of exploration. While much progress has been made, fundamental questions remain, including “What is the role of fold (=redundancy) in randomized acquisition?”; “Can we control cost, optimize the sampling, and make the recovery robust with respect to calibration errors?”; and finally “How does randomized sampling affect time-lapse seismic measurements?” To answer these questions we will use a combination of CS and simulation-based acquisition design to produce optimized and physically-realizable acquisition schemes and performance metrics for 3D (time-lapse) seismic acquisition. Outcome: Cost-effective and optimized acquisition technology for WEIs that require large (>100k) channel counts on land and significant numbers (>10k) of cheap autonomous ocean-bottom nodes in marine environments.

II—Resilient wave-equation based inversion technologies. WEIs suffer from acquisition and surface multiples and source/receiver ghosts, excessive computational costs for complicated physics, sensitivity to starting models, and ill-posedness of multi-parameter inversions. Inverse problems in geophysics are often ill-posed, which leads to systems of equations that are ill-conditioned and difficult to solve. We plan to tackle these issues by investigating model-space structure and correlations among model updates as priors, by using techniques from stochastic optimization and batching, and by reformulating WEI using partial differential equation (PDE)-constrained and convex optimization approaches. Outcome: A fast, resilient and scalable wave-equation based inversion methodology for complicated wave physics. This innovative technology will be less sensitive to incomplete data, starting models, ill-posedness, and calibration errors.

III—Technology validation on field datasets. Great progress was made in DNOISE II in the development of the next-generation of seismic data acquisition, processing, and inversion technology. Validation of these technologies on realistic datasets [82] remains a crucial step towards the use of our technology by industry. As part of DNOISE III, major effort will be devoted towards the creation of versatile, automatic workflows and validation of our technology on realistic 3D blind synthetic and field data sets. Outcome: A framework including practical workflows that validates DNOISE’s technology on industry-scale datasets.

IV—Risk mitigation. ES in industry is mostly aimed at mitigating risk. Therefore these industries are risk averse and slow in the adoption of innovative techniques such as randomized acquisition, processing and inversion algorithms that are part of this research project. This relatively slow acceptance is partly due to the lack of “error bars” on our inversion results. Quantification of risk is therefore instrumental for major industrial uptake of our technology. Outcome. CS and wave-equation based inversion methodologies that include uncertainty quantification.

V—Wave-equation based inversion meets reservoir characterization. Obtaining accurate inversions for elastic properties is only a first step towards understanding the complexities of both conventional and unconventional oil and gas reservoirs. Questions such as “Where to drill?” and “How to produce?” are typically not addressed. We plan to address these questions by combining a target-oriented inversion approach with techniques from deep learning, which models high-level abstractions in data with multiple nonlinear transformations. Outcome. A targeted wave-equation based information retrieval technology that is capable of mapping the lithology and time-lapse changes in the reservoir associated with oil and gas production.

VI—Randomized computations and data handling. WEI exhibits—by virtue of the independence of the different (monochromatic) source experiments—a “map-reduce” structure that can be exploited by randomized algorithms. While this separable structure imposes a natural way to parallelize and manage data flows, the gradient calculations themselves are expensive and exceedingly large in number. In addition, the gradient calculations must be synchronized and of similar accuracy for each iteration. By using recent developments in stochastic optimization, we will relax these requirements thus allowing new approaches for parallelization and data handling. Outcome. A scalable computational inversion framework driven by gradient calculations that do not need to be synchronized, which works with small subsets of source experiments, and can vary in accuracy and can be decentralized.

In summary. By integrating these objectives into an overarching project, DNOISE III will ultimately provide scalable acquisition, processing, inversion, and classification methodologies that overcome the main challenges of modern-day ES. Our combination of breakthroughs in mathematics, data science and SC into a practical, computationally feasible, and scalable framework will enable our industrial partners to work with the massive seismic data volumes prevalent in this Petascale Age.

Research approach

The broadening of our research program into several key areas allows us to sustain our leading role in replacing the conventional seismic processing paradigm, where data is subjected to a series of sometimes ad hoc sequential operations, with a new inversion paradigm. By adapting breakthroughs in mathematics, theoretical computer science, and SC, our methodology controls acquisition and computational costs better, reduces sensitivity to starting models, and assesses risks accurately. Another essential requirement for the success of this research project will be our ability to develop and validate our technology on field data sets using our own computational resources and a $10M (17k CPU core) high-performance computing (HPC) facility in Brazil made available to us as part of the International Inversion Initiative (III). Having access to this world-class HPC facility puts our group in a unique position to make fundamental advances in ES and will allow our group to train students to be the future leaders in geophysics and data science, in industry and academia.

Research team. Our core team includes 4 senior tenured and 3 first-year tenure-track faculty members from UBC-EOAS (PI, Felix J. Herrmann), the SFU Department of Earth Sciences (Andrew Calvert), UBC Mathematics (Ozgur Yilmaz and Yaniv Plan), UBC Computer Science (Chen Greif and Mark Schmidt), the UBC Department of Electrical and Computer Engineering (Sudip Shekhar), and the Department of Mathematical and Industrial Engineering at Ecole Polytechnique de Montreal (Dominique Orban). This diverse team, with experts in theoretical and observational ES (the PI and Andrew Calvert), CS (Ozgur Yilmaz and Yaniv Plan), SC and optimization (Chen Greif and Dominique Orban), ML (Mark Schmidt), and integrated circuits (Sudip Shekhar), covers all areas essential to ES, ranging from acquisition design, including hardware for the next generation of field equipment, to modern tools from numerical linear algebra designed to handle the extremely large, expensive-to-evaluate, and data-intensive problems in computational and observational ES. The team's areas of expertise overlap sufficiently to accommodate changes in the team or interruptions because of leaves.

Our diverse research also allows us to tackle a wide variety of problems and gives the students access to faculty with highly complementary research skills and backgrounds. Despite varied research foci, we have built a tight-knit yet cross-disciplinary research team that also includes (under)graduate students, post-doctoral fellows (PDFs), scientific personnel, and local and international collaborators. These additional co-investigators (co-Is) include Professor Rachel Kuske (UBC Mathematics), Professor Ben Recht (UC Berkeley), Dr. Tristan van Leeuwen (Utrecht University), Dr. Dirk-Jan Verschuur (Delft University of Technology), Dr. Sasha Aravkin and Ewout van den Berg (IBM Watson), Dr. Hassan Mansour (Mitsubishi Research), Professor Rayan Saab (UCSD), Dr. Gerard Gorman and Professor Mike Warner (Imperial College London (ICL)), and Professor Michael Friedlander (UC Davis). As part of the International Inversion Initiative (III), we have also entered into formal collaborations with Professor Mike Warner’s group at ICL.

Managing a large, cross-disciplinary team is always a challenge. Our research collaboration espouses Alan Hastings' suggestion in “Key to a Fruitful Biological/Mathematical Collaboration” (SIAM News, 2014):

“These collaborations succeed only when there is a common language, which means that participants must work to learn as much as possible about all fields involved.”

We have successfully overcome this challenge, and will continue to do so, by organizing a number of DNOISE III related activities including weekly seminars, weekly field data meetings, bi-weekly software development meetings, and regular meetings amongst the PIs once per term. The co-PIs in charge of the main research activities will meet at least three times per term with their teams. Co-advising students and fostering team-member collaborations (e.g., by teaming up PDFs with graduate students) have also proven to be highly effective. In our previous DNOISE collaborations, these management and training approaches have resulted in a world-class research program that is widely recognized for its scientific contributions, uptake of its innovations, a strong journal publications record (a total of 65 journal papers and 150 conference papers), extensive international collaborations and excellent training of highly qualified personnel (HQP) (exceeding 60). These successes have resulted in a total of $3.2M in cash support from what are now 14 industrial partners and in $378k in in-kind contributions, supporting the training of a total of 60 HQP.

Our weekly meetings, which involve all DNOISE team members, have particularly contributed towards the development of a common language and generated numerous cross fertilizations between research areas. (See our mind map for the connections.) These synergies have occurred for the PIs of the first DNOISE grants and among other faculty collaborators, as demonstrated by the expansion of our research team. These collaborations have resulted in an increasing stream of publications while engaging our students with a wide range of cross-disciplinary researchers.

Research management. Effectively leading and synthesizing a large varied research project with many moving parts—including international collaborations, organization of industry consortium meetings attended by all the CRD participants, working with industry, coordinating a large research team, delivering on contracts and software releases—is critical for realizing the true potential of the proposed innovations. To support the team so that they can deliver on these goals, this proposal budget includes (aside from existing part-time program support and several IT, web, and scientific programming support staff) a project-management and grant-tenure position. This project-management person will assist with (i) schedule set up and work scopes, so DNOISE III deadlines and milestones are met; (ii) producing annual DNOISE III progress reports in tandem with project leaders; (iii) research dissemination via the SLIM website; and (iv) coordination of the SINBAD consortium meetings that bring in our industrial partners. The grant-tenure hire will assist with general student and post-doc supervision and with coordinating our industrial contacts. We are currently negotiating with the UBC administration to create this grant-tenure position whereby UBC will contribute to financial support for the position in return for the teaching and administrative services rendered to the EOAS Department.

Deliverables. In addition to disseminating our research results through refereed journal publications, refereed expanded conference presentations and papers, technical reports and annual meetings with our industrial partners, we will prepare frequent software releases and conduct trials on 3D field data sets. These activities will involve all participants in the CRD in a variety of researcher subsets. Our software releases will be documented and distributed via GitHub, a professional code repository, or via conventional means.

Detailed Proposal

In the following, the action items and project leaders are bold-face numbered and appear on the Activity Schedule of Form 101 and in the Detailed Activity Schedule included in the Appendix of the Budget Justification, which also includes a Gantt chart with timelines for the HQP.

I—Seismic data acquisition for wave-equation based inversion

Although the DNOISE I and II projects have made significant progress possible in the design and implementation of randomized sampling and structure-promoting (e.g. transform-domain sparsity) recovery techniques, many fundamental and practical challenges remain, including convincing risk-averse management of the “optimality” of the survey design while being cognizant of practical limitations and sampling requirements of 3D WEI. Key questions that need to be addressed to further support the adoption of the principles of CS [92–95] include: “How do noise and calibration errors affect randomized acquisition and wavefield recovery?”; “What is the impact of randomized acquisition on time-lapse seismic measurements?”; and “Why random sampling?”. To answer these questions, we propose to work on the following topics:

I(i)—Randomized acquisition schemes. I(ia) We will build on conventional structure-promoting recovery techniques with transform-domain sparsity [3] and on the more recently developed matrix- [7,22,96,97] and tensor completions [26,27,60,98,99]. These include recovery from missing traces, short- [25] and long-time [13] jittered continuous marine acquisitions for towed arrays or ocean-bottom nodes (OBN) where single-vessel periodic firing-time recordings are replaced by (multi-vessel) continuous recordings with randomized firing times. In these approaches, coherent subsampling-related artifacts such as aliases and source-crosstalk are shaped into incoherent noise, breaking up coherent interference. Such incoherent noise is favorable for convex optimization because these schemes remove it by restoring structure. I(ib) We will investigate the effect of ambient noise as a function of the “source fold”, i.e., the number of sources partaking in simultaneous source experiments. I(ic) We will analyze the performance of our recovery algorithms when including regularization necessary for datasets acquired on unstructured (irregular) grids [23,100]. I(id) We will continue to look for structure revealing representations via: permutations [101], mid-point offset transformations [7,22], Hierarchical Semi-Separable (HSS) matrix [102] and Hierarchical Tucker Tensor (HTT) formats. Also, we will investigate structure promoting optimizations including SVD-free matrix factorizations [7,22] and manifold optimization in the HTT format [26,101]. Our approaches differ significantly from existing Cadzow [103,104] and alternating tensor optimization techniques [98] because our methods are SVD-free and work on either complete data or on multi-level subsets of data as in HSS matrix and HTT formats. Finally, I(ie), we plan to theoretically analyze randomized time-lapse acquisitions [16–18], extending theoretical results from distributed CS [105].
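To make the idea of jittered sampling in I(i) concrete, the following minimal sketch contrasts regular subsampling with jittered subsampling of a 1D grid of candidate source (or firing-time) positions. The grid size, the subsampling factor, and the function names are hypothetical and chosen only for illustration; actual marine designs involve continuous firing times and vessel constraints that are not modelled here.

```python
import random


def regular_indices(n, factor):
    """Periodic subsampling: keep every `factor`-th grid point."""
    return list(range(0, n, factor))


def jittered_indices(n, factor, seed=0):
    """Jittered subsampling: one random grid point per cell of `factor` points.

    Randomness breaks the periodicity that causes coherent aliases, while the
    one-sample-per-cell rule keeps the largest gap bounded by 2 * factor - 1.
    """
    rng = random.Random(seed)
    return [start + rng.randrange(min(factor, n - start))
            for start in range(0, n, factor)]


def max_gap(indices):
    """Largest spacing between consecutive selected grid points."""
    return max(b - a for a, b in zip(indices, indices[1:]))


n, factor = 120, 4
reg = regular_indices(n, factor)
jit = jittered_indices(n, factor)
print("regular :", reg[:8], "max gap", max_gap(reg))
print("jittered:", jit[:8], "max gap", max_gap(jit))
```

Both schemes keep the same number of samples; the jittered version trades the regular spacing for controlled randomness, which is the property the recovery techniques rely on.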

I(ii)—Performance metrics. “Optimal” acquisition design hinges on several key factors that include economics, practicality, theoretical and actual recovery quality, speed, noise sensitivity, and perceived risks. As part of this research project, we want to investigate I(iia) a number of quantitative performance measures that will allow us to compare different acquisition designs and assess the recovery quality in several domains. We will also I(iib) compare the performance of randomized acquisitions that make few assumptions to more data-adaptive sampling methods such as blue-noise (jitter, [23,106]), importance sampling [107], and experimental design [108,109].
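One simple candidate for a quantitative performance measure of the kind mentioned in I(iia) is the recovery signal-to-noise ratio in decibels. The sketch below is only an example of such a metric; the proposal does not prescribe it, and the function name and the flat-list data layout are assumptions.

```python
import math


def recovery_snr_db(reference, recovered):
    """Return 20 * log10(||reference|| / ||reference - recovered||) in dB."""
    err = math.sqrt(sum((r - x) ** 2 for r, x in zip(reference, recovered)))
    ref = math.sqrt(sum(r ** 2 for r in reference))
    if err == 0.0:
        return math.inf  # perfect recovery
    return 20.0 * math.log10(ref / err)


# Toy example: a two-sample "wavefield" and a slightly wrong recovery.
reference = [3.0, 4.0]
recovered = [3.0, 4.5]
snr = recovery_snr_db(reference, recovered)
print(f"recovery SNR = {snr:.1f} dB")
```

The same function can be applied in different domains (e.g., to data in the source-receiver domain or to transform coefficients) to compare acquisition designs on an equal footing.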

I(iii)—Simulation-based acquisition design. While CS gives fundamental insights, measuring and optimizing its performance in realistic settings (e.g., via “brute force” running of different realizations and choosing the best performing one) remains challenging. Therefore, we will supplement our efforts under I(i) (see above) by conducting acquisition simulations on realistic synthetic models. For 3D (time-lapse) seismic acquisition, this is computationally expensive; however, with our access to high-performance computing infrastructure we will be able to carry out experiments to: I(iiia) demonstrate the validity of different randomized acquisitions and I(iiib) optimize acquisitions for certain geological settings including time-lapse seismic data. These research projects require highly specialized and challenging simulations and performance metrics (under I(ii)) for different inversion modalities, e.g., full-waveform inversion (FWI) and reverse-time migration (RTM) differ in requirements and quality control.

I(iv)—Survey design principles. We will combine results from I(i–iii) and derive I(iva) feasible, and field realizable, 3D (time-lapse) acquisition schemes that balance cost, sampling density for marine acquisition [13,25], and degree of repeatability in time-lapse seismic data sets [16–18]. These principles will include qualitative “Do’s and Don’ts”, and quantitative constraints (e.g., “If you want this error level, this is the recommended sampling density”). The research projects will strongly encourage our industry partners to adopt our sampling technology. In particular, we will focus our efforts on developing I(ivb) economical acquisition scenarios that leverage marine acquisition with large numbers of inexpensive OBNs or vertical arrays and on land with high-channel count wireless systems. We will also further enhance our I(ivc) time-lapse acquisition approach by explicitly exploring commonalities among different vintages [16–18].

I(v)—Calibration. Structure-promoting recovery techniques depend critically on accurate modelling of the acquisition: even small multiplicative errors in the sampling matrices (e.g., phase or coupling errors) can be detrimental to recovery. As recent successes in increasing the productivity of acquisition with CS [20] demonstrate, these calibration issues can be overcome but at an economic price. Requiring expensive, and technically difficult to achieve, calibrations during acquisition could prevent our method from reaching its true economic potential. To better understand what level of calibration is necessary and to make our acquisition schemes immune to certain acquisition errors, we will first I(va) study the effects of realistic multiplicative errors, such as timing errors in jittered marine acquisition. Next I(vb), we will incorporate lifting techniques into our recovery algorithms [110]. These lifting techniques are equivalent to reformulating multiplicative non-convex problems, such as “blind deconvolution” [110] or “phase retrieval” [29], into convex problems that do not have local minima. However, these approaches are quadratic in the unknowns and therefore computationally very challenging. When successful, these techniques will allow us to estimate and then compensate for certain multiplicative calibration errors such as coupling issues in geophones.
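The lifting idea in I(vb) can be written down for a generic bilinear measurement model. The formulation below is a sketch under assumptions that are not taken from the proposal: real-valued measurements \(y_i\), a calibration vector \(h \in \mathbb{R}^k\) acting through known vectors \(b_i\), and a signal \(x \in \mathbb{R}^n\) sensed through known vectors \(a_i\).

```latex
% Assumed bilinear model: each measurement is a product of two linear forms.
\[
y_i = (b_i^{T} h)\,(a_i^{T} x)
    = b_i^{T}\,(h x^{T})\,a_i
    = \langle b_i a_i^{T},\, X \rangle,
\qquad X := h x^{T} \in \mathbb{R}^{k \times n},
\quad i = 1,\dots,N.
\]
% The measurements are linear in the lifted rank-one matrix X.
% Convex relaxation: replace the rank-one constraint by the nuclear norm.
\[
\min_{X \in \mathbb{R}^{k \times n}} \; \|X\|_{*}
\quad \text{subject to} \quad
y_i = \langle b_i a_i^{T},\, X \rangle, \quad i = 1,\dots,N.
\]
```

The lifted unknown \(X\) has \(k \cdot n\) entries instead of \(k + n\), which is the sense in which these approaches are quadratic in the unknowns.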

I(vi)—Surface-related multiples. Aside from making seismic data acquisition more cost effective and robust with respect to calibration errors and noise, we will improve the recovery from incomplete data by including the physics of the free surface. By including the multiple-prediction operator in the recovery, we will investigate using the bounce points at the surface as secondary sources. For 3D seismic data, this will require on-the-fly I(via) interpolations of receivers with HSS matrices (as in I(id) above, [42]); I(vib) infill of missing near offsets [39]; and implementation of computationally efficient multilevel Estimation of Primaries by Sparse Inversion [37,38] in 3D. I(vic) In addition to providing additional information, inclusion of the physics of surface-related multiples in our mathematical formulations [94] may give us the possibility to solve the blind source estimation problem theoretically for problems that involve the free surface. Accomplishing a solution to “blind deconvolution problems with feedback” will be a major breakthrough, providing a thorough justification of source-estimation techniques proposed by our group.

I(vii)—Next-generation (wireless) hardware. To meet the demands of modern-day WEI technology, a new sampling paradigm is needed. While projects summarized in I(i)—I(vi) will be important steps, a similar effort is required in the development of the next-generation OBNs and wireless land systems. I(viia) We will design hardware that exploits our knowledge of CS, quantization, and large-scale optimization to create techniques to limit communication, increase storage capacity of autonomous devices, and to improve battery life of field equipment. I(viib) We also will continue to investigate “sign bit” seismic acquisition [111] using recent work on 1-bit matrix completion [112], which could have a major impact on how wavefields are discretized since it would massively reduce data storage and cost of field equipment.

In summary. With a continuing track record of contributions to various aspects of CS (ranging from theory development to the design of economic and practical acquisitions, and the implementation of scalable wavefield recovery technology based on large-scale solvers), we remain world leaders in ES, improving upon current and often ad hoc solutions to data-acquisition challenges. Project leaders. Ozgur (Co-PI); Felix; Yaniv (Co-PI); Sudip (Co-PI, I(viia),I(viib)). Project team. Navid Ghadermarzy (PhD, I(ia),I(ib)); Rajiv (PhD, I(ia),I(id)); Curt da Silva (PhD, I(ia),I(id)); Haneet (PhD, I(ia),I(ib),I(iiia),I(iva),I(ivb)); Oscar Lopez (PhD, I(ia),I(ic)); Felix O. (PhD, I(ie),I(iiia),I(iiib),I(iva),I(ivc)); Philipp Witte (PhD, I(ie),I(iiia),I(iiib)); new PDF 1 (I(iia),I(iib),I(iiia),I(iiib),I(iva),I(ivb)); new PDF 2 (I(iiib)); Rong (PDF, I(va),I(vb),I(vic)); Ernie (PDF, I(ia),I(vb),I(va),I(vb)); Mathias Louboutin (PhD, I(via),I(vib)); New PhD 2 (I(viib)).

II—Resilient wave-equation based inversion technologies

Wave-equation based inversions such as linear reverse-time migration (RTM), nonlinear wave-equation migration velocity analysis (WEMVA) and full-waveform inversion (FWI) have become the main inversion modalities utilized by industry with the advent of fast compute. While these types of inversions have important advantages, especially in 3D, major difficulties still exist regarding exorbitant demands on sampling and computations, non-uniqueness, and the lack of versatile automatic workflows. Albeit somewhat arbitrary — the boundaries between these inversion modalities are blurring — we separate our efforts into “high-frequency” reflection and “low-frequency” turning wave inversions:

II(i)—“High-frequency” reflection-driven inversions. In many ways reflection-based inversions constitute the “old” imaging and velocity analysis techniques, which are prone to adverse effects of acquisition footprints, surface-related multiples, unknown sources, and sensitivity to timing errors. When given accurate velocity models and densely-sampled data, surface-related multiples and primaries can be jointly imaged via randomized sparsity-promoting optimization that produces artifact-free, true-amplitude images and source estimates in 2D at the cost of roughly one RTM with all the data [57]. II(ia) We will extend this groundbreaking work to 3D; II(ib) incorporate multiples into WEMVA with randomized probings [58]; and II(ic) study how surface-related multiples improve nonlinear migration, i.e., FWI with accurate starting models. To overcome missing near-offsets, we will incorporate II(id) near-offset predictions [39] and on-the-fly interpolations. In all cases, we will use the wave-equation itself to carry out the otherwise prohibitively expensive, dense matrix-matrix multiplies that undergird EPSI [37]. II(ie) We will use this linearized inversion methodology to explore common information among (4D) time-lapse images, to improve their image quality [19]. II(if) We will remove timing errors by extending interferometric imaging [32] to higher order, as in recent work by Rong [31].

II(ii)—Turning-wave and reflection-driven inversions. Directly inverting for the earth parameters, given adequately-sampled seismic data, is in many ways the “holy grail” since this would potentially give us access to elastic properties given sufficient computational resources and accurate starting models. Unfortunately, neither is available. II(iia) To reduce computational requirements, we will utilize a frugal FWI approach [47,82], which uses data and numerical accuracy only as needed, thereby reducing computing and data demands. During FWI we answer the question “How to maximize progress towards the solution given a limited computational budget—i.e. limited passes through the data and limited accuracy?”. We utilize our Wavefield Reconstruction Inversion (WRI)[52,85,87,88] to avoid cycle skips due to inaccurate starting models. We avoid these local minima by extending the search space. Contrary to recently proposed extensions by Symes [113] and Biondi [114], who make velocity models subsurface-offset or time-lag dependent, and by Warner [115], who introduced a matched filter in Adaptive Waveform Inversion, we derive our extension directly from the full-space “all-at-once” [83] approach. Instead of eliminating the wave-equation constraint [83,116], WRI involves a non-convex alternating optimization via variable projections where wavefields that fit both the wave equation and the observed data are solved first, followed by an update on the model parameters that minimizes the “PDE misfit” assuring adherence to the physics. Compared to the full-space “all-at-once” [83] and “contrast-source” [117] methods, no storage and updates are necessary, which is a major advantage. II(iib) We will continue to develop, extend to 3D and evaluate WRI compared to other extension methods, and II(iic) exploit its special structure—e.g., its sparse Gauss-Newton Hessian is highly beneficial to multi-parameter WRI [52] and uncertainty quantification (see Risk Mitigation below). 
II(iid) While there are strong indications that WRI is less prone to cycle skips, there is no guarantee it arrives at the global minimum. Motivated by recent breakthroughs in “blind deconvolution” [110] and “phase retrieval” [29], we will explore the bi-linear structure of WRI in an alternating non-convex optimization procedure that includes the computation of starting models directly from the data that lead to the global minimum [118]. II(iie) Following Rong [31], we will also exploit high-order interferometric FWI, which is another instance of lifting. II(iif) Finally, we plan to extend our work on inverting time-lapse data [19] to nonlinear FWI.
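For orientation, the WRI approach discussed in II(ii) can be summarized by a penalty formulation for a single source and frequency. The notation below is an assumption introduced here for illustration: \(A(m)\) denotes the discretized wave-equation (Helmholtz) operator for model \(m\), \(q\) the source, \(P\) the operator sampling the wavefield \(u\) at the receivers, \(d\) the observed data, and \(\lambda\) a trade-off parameter.

```latex
% Joint objective over model m and wavefield u: data misfit plus PDE misfit.
\[
\min_{m,\,u} \; \tfrac{1}{2}\,\| P u - d \|_2^2
  \;+\; \tfrac{\lambda^2}{2}\,\| A(m)\,u - q \|_2^2 .
\]
% Variable projection, step 1: for fixed m, solve a data-augmented wave equation
% in the least-squares sense for the wavefield.
\[
\bar{u}(m) \;=\; \arg\min_{u}\;
\left\|
\begin{pmatrix} \lambda A(m) \\ P \end{pmatrix} u
-
\begin{pmatrix} \lambda q \\ d \end{pmatrix}
\right\|_2^2 .
\]
% Step 2: update m by reducing the objective evaluated at the projected wavefield.
\[
\phi(m) \;=\; \tfrac{1}{2}\,\| P \bar{u}(m) - d \|_2^2
  \;+\; \tfrac{\lambda^2}{2}\,\| A(m)\,\bar{u}(m) - q \|_2^2 .
\]
```

Because \(\bar{u}(m)\) is recomputed for each \(m\), the wavefields need not be stored or updated as separate optimization variables. For fixed \(\bar{u}\) and an operator \(A(m)\) that is linear in \(m\), the residual \(A(m)\bar{u} - q\) is linear in \(m\), which is the bi-linear structure mentioned in II(iid).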

II(iii)—Mathematical techniques. WEI is challenging because it calls for various sophisticated techniques that include: II(iiia) Implementation of both frequency and time-domain “simulations for inversion” solvers that have low setup costs, controlled accuracy, and limited memory footprint. This work requires preconditioning for time-harmonic Helmholtz systems with conjugate gradients [47] or residuals [50], and preconditioners with shifted Laplacians [119–121]. In addition, we will compare time-stepping methods to time-harmonic approaches extending recent work by Knibbe [121] to FWI. To further reduce computational costs, we will also control the accuracy of forward and adjoint solves. Finally, we will evaluate recently proposed model reductions [122] and multi-frequency simulations with shifts [123] and investigate possibilities using randomized sampling techniques in checkpointing [124] to limit storage and re-compute costs of time-stepping. II(iiib) WRI works well in 2D but its extension to 3D is arduous since it involves data-augmented wave solves. Aside from having a PDE block, these systems have data blocks that make them harder to solve. Possible solutions include preconditioning with incomplete lower-upper (LU) factors that call for efficient direct and iterative solvers [125–127]; deflation [128,129] to remove outlying eigenvalues; randomized dimensionality reduction [130]; and alternative saddle-point optimization formulations such as augmented Lagrangians [131,132] and quasi-definite systems [133]. II(iiic) Our WEI implementations are based on applying the chain rule to compute gradients that are consistent with the discretization of the wave equation (PDE), verifiable and suitable for second-order schemes such as quasi Newton [134]. However, these valuable properties are exploited at the expense of certain benefits of continuous formulations. We want to know “When is it best to discretize?”.
II(iiid) Inverse problems benefit from prior information. Therefore, we will incorporate regularization into our 3D WEIs via: modified Gauss-Newton with \(\ell_1\) for conventional FWI with dense randomized Jacobians [135]; via a Gauss-Newton method based on quadratic approximations and scaled projected gradients for WRI [90]; and projected quasi-Newton with randomization [47,67] for FWI and WRI [52]. Specifically, we will study projections on convex sets, including box constraints, \(\ell_1\) and total-variation norms, as well as other, possibly non-convex, regularizations. II(iiie) \(\ell_2\)-norm misfits are ill-equipped to handle modelling errors and correlations amongst different (monochromatic) source experiments. To overcome this shortcoming, we will derive formulations that capitalize on student t misfits [76] and factorized nuclear-norm minimization [7]. II(iiif) Regularizations call for parameter selections and we plan to make these selections void of user input by using properties of Pareto trade-off or “L” curves [76] and nuisance parameter estimation [70].
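The projections onto convex sets mentioned in II(iiid) have simple closed forms for the two most common cases. The sketch below shows Euclidean projections onto a box and onto an \(\ell_1\) ball, the latter with a standard sort-and-threshold procedure. The function names and the plain-list data layout are assumptions, and this is not the project's implementation.

```python
import math


def project_box(x, lower, upper):
    """Project onto {x : lower <= x_i <= upper} by clipping each entry."""
    return [min(max(v, lower), upper) for v in x]


def project_l1_ball(x, radius):
    """Euclidean projection onto {x : ||x||_1 <= radius}, with radius > 0."""
    if sum(abs(v) for v in x) <= radius:
        return list(x)  # already feasible
    # Find the soft-threshold level theta from the sorted magnitudes.
    u = sorted((abs(v) for v in x), reverse=True)
    cumsum, theta = 0.0, 0.0
    for j, uj in enumerate(u, start=1):
        cumsum += uj
        t = (cumsum - radius) / j
        if uj - t > 0:
            theta = t
    # Shrink every magnitude by theta and keep the original signs.
    return [math.copysign(max(abs(v) - theta, 0.0), v) for v in x]


# Hypothetical velocity bounds in km/s and a small l1 example.
velocities = project_box([1.2, 5.9, 3.0], lower=1.5, upper=4.5)
sparse = project_l1_ball([-3.0, 1.0], radius=2.0)
print(velocities, sparse)
```

In a projected (quasi-)Newton or projected-gradient scheme, such a projection is applied after every model update so that the iterates remain feasible.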

In summary. During DNOISE II, we have firmly established our research team as one of the leading academic groups in computational ES with expertise in a wide range of topics in wave-equation based inversion (WEI). While perhaps ambitious, our research program includes concrete plans to control computational costs and non-uniqueness. If our approaches indeed prove successful (i.e., we can invert for the earth properties from more or less arbitrary starting models and adequate data), the question arises “Should we recover fully sampled data first, followed by wave-equation based inversion, or should we directly invert randomly subsampled data?”. We ask this question because acquisition of fully sampled data will likely remain elusive because of physical constraints. We therefore will make a concerted effort to answer this fundamental question [94] as part of this grant. Project leaders. Felix, Chen (Co-PI), Tristan (Co-I), Mark (Co-PI) and Dominique (Co-PI). Project team. Mathias (PhD, II(ia),II(ic),II(id)); Rajiv (PhD, II(ib),II(iiie)); Felix O. (PhD, II(ie),II(iif)); Rong (PDF, II(if),II(iid),II(iie)); Ernie (PDF, II(ia),II(if),II(iid),II(iiid),II(iiie)); New PDF 2 (II(iia)); Bas Peters (PhD, II(iib),II(iic),II(iiib),II(iiid)); Mengmeng Yan (PhD, II(iic)); Zhilong Fang (PhD, II(iic)); Rafael (PDF, II(iiia)); new PhD 3 (II(iiia),II(iiib)); New PDF 3 (II(iiic),II(iiie),II(iiif)).

III—Technology validation on (3D) field datasets

The success of inverting seismic data for earth parameters depends on the proper conditioning of the data prior to and/or during the inversion, proper parameter settings and “computational steering” carried out during the inversions [136]. Given our team’s access to large computational resources both at UBC and in Brazil, as part of our partnership in the International Inversion Initiative (III), we will develop, preferably automatic, workflows on (blind) synthetic and field datasets representative of different geological settings. Specifically, our research projects in this research area include:

III(i)—Simulation-based verification of wave-equation based inversion. WEI, WEMVA, and FWI in particular, are not yet mature “production ready” technologies and suffer from parameter sensitivities, non-uniqueness, and extremely high computational costs especially when including elasticity, realistic velocity heterogeneity, and fracture related anisotropy. During DNOISE II, we developed a number of core technologies that were designed to reduce the computational costs [76,82], automatically estimate parameter settings [70], and regularize the inversion problem [82,90,137]. III(ia) While initial tests on mostly 2D data are encouraging, these techniques have not yet been tested or fully implemented in 3D, made anisotropic, or applied to field data. III(ib) Also, our time-harmonic FWI framework has not been compared to other implementations, such as those developed by Imperial College London [138], another group involved in the III project in Brazil. III(ic) To gain more insight into the performance of DNOISE’s technology in 3D and how it compares with other technologies, we will pursue a series of industry-scale, simulation-based FWI studies where we have control over the model complexity and where we know the answer. For example, we will evaluate how “close” the starting model must be to the final model, particularly when using new approaches that are less sensitive to cycle-skipping. III(id) With the experience obtained in these controlled experiments, we will be in a good position to tackle problems on field data.

III(ii)—Automatic parameter selection. The success of WEI often critically depends on certain unknown parameters such as unknown source scalings, structure-inducing regularization parameters and noise levels. While the latter are relatively well understood for linear problems [76], the estimation of these parameters for nonlinear problems is typically more challenging. While nonlinear WEI problems will always require a certain level of interactive “trial and error” testing of parameters, we managed to automate a number of these parameter-setting exercises. We have also developed computationally efficient WEI techniques, based on certain automatic heuristics that have not yet been thoroughly verified in 3D [47]. III(iia) To get better insight into nuisance-parameter estimation [70] and efficient WEI [47], we will validate our nuisance estimation for source and III(iib) other nuisance parameters on 3D FWI for carefully chosen synthetic models. III(iic) Likewise, we will test 3D imaging with multiples [57], III(iid) compare frugal quasi-Newton [47] and efficient modified Gauss-Newton [90,137] techniques, which work with randomized subsets of data, to FWI with all data, and III(iie) extend the method of variable projection to other nuisance parameters such as weighting and different types of (non-convex) misfit functions, including the student t misfit function or (spectral) matrix norms.

III(iii)—Wave-equation based inversion on field data. The success of applying WEI to field data depends on a number of key factors that include being able to handle unmodelled phases and viscoelastic amplitude effects. A number of approaches will be developed such as III(iiia) including more wave physics, III(iiib) removing unmodelled phases, e.g., via (transform-domain) masking, by using III(iiic) robust misfit functions that are insensitive to outliers [75], or by III(iiid) appropriate regularization. To date, most DNOISE WEI work has focused on marine field datasets, and this work is directly applicable to continuing exploration and development offshore eastern Canada. WEI has the potential to identify exploration drilling objectives that cannot be interpreted from conventionally processed seismic surveys (e.g., smaller reservoirs). With quantitative estimates of seismic parameters from WEI, including time-lapse surveys, improved reservoir characterization and optimized exploitation is possible, particularly where production methods that modify elastic parameters, such as enhanced recovery or hydraulic fracturing, are employed. In some areas, for example the foothills of the western Canadian sedimentary basin, onshore exploration can be limited by reflection data quality, but WEI of transmitted phases provides a complementary approach for deriving structural information given that sufficiently long offsets and low frequencies are recorded. Our research views field data acquisition, data inversion, and interpretation, as an integrated process, and one result of this project is likely to be specific suggestions for modified future field acquisition that will enable practical WEI. We will seek Canadian datasets with limited confidentiality from our industry partners, preferably from the east coast, to contribute to the development of our computationally intensive, field-oriented WEI methods. 
The availability of large-scale computing infrastructure will allow us to derive results from these field data that cannot be achieved by other academic researchers.

In summary. By having access to virtually unparalleled computational resources, we will be in the position to thoroughly evaluate wave-equation based inversion technology on industry-scale synthetic and field data. This evaluation will lead to a maturing of the technology and practical workflows. Project leaders. Andrew (Co-PI) and Felix. Project team. Zhilong Fang (III(ia),III(ic),III(iia),III(iib)); Mengmeng Yan (PhD, III(ib),III(ic)); Shashin Sharan (PhD, III(ic),III(id),III(iid)); new MSc (III(iiia)); Mathias (PDF, III(iic)); Rafael (PDF, III(iie)); New PhD 10 (III(iiib),III(iiic),III(iiid)).

IV—Risk mitigation

The above research projects give us a solid foundation for establishing WEI technologies. While WEI will undoubtedly yield estimates of earth properties of unprecedented quality and resolution, these results will only be of limited value if they do not include estimates of uncertainty to help industry mitigate risks in the exploration and production (E&P) of hydrocarbons. Determining quantitative assessments of risk, also known as uncertainty quantification (UQ)[139–141], is for large-dimensional problems notoriously difficult because of the excessive computational demands and the mathematical structure of CS and wave-equation based inversion (WEI). To study these difficult problems we will work on:

IV(i)—Discrete Bayesian techniques. Relatively little is known regarding the UQ of individual components of structure-promoting CS recoveries [142]. For example, questions such as “What is the error bar for this particular recovered curvelet coefficient?” are virtually unanswered for compressible signals. IV(ia) We will extend model selection techniques to compressible signals, including UQ estimates in the physical domain. IV(ib) Techniques from belief propagation [143,144] have connections to Bayesian inference and may offer new ways to carry out UQ for CS. For more general inverse problems, including FWI, more is known about estimating uncertainties, and Markov chain Monte Carlo (MCMC) methods have been proposed [139–141]. However, these techniques are prohibitively expensive for realistic problems because of slow convergence. Unfortunately, exploiting low-rank structure of the Hessian [140] is ineffective in ES, where Hessians are “full” rank. We propose to work on two methods in this area. IV(ic) For high frequencies, the GN Hessian can be modelled as a pseudodifferential operator, which can be well approximated by matrix probing [28,60]. IV(id) We will exploit the property that the GN Hessian of waveform reconstruction inversion (WRI) is a sparse matrix.

IV(ii)—Bayesian UQ for WRI. With model and data sizes in 3D seismic problems exceeding \(10^9\) and \(10^{15}\), respectively, discrete formulations for UQ become computationally prohibitive and basic implementations of MCMC will not work. For this reason, we will draw on ideas from variable projection [70], IV(iia) for alternating optimization in general and IV(iib) for WRI [52,85,87,88] specifically, and combine these results with recent work on fast MCMC for functions [145].

In summary. Having access to uncertainty determinations for large-scale geophysical inversions will greatly facilitate the uptake of DNOISE’s technology by industry. Project leaders. Felix, Yaniv (Co-PI) and Rachel Kuske (Co-I). Project team. New PhD 5 (IV(ib),IV(ib)); Curt da Silva (PhD,IV(ib),IV(iia)); Zhilong Fang (PhD,IV(ib),IV(iib)); Chia Lee (PDF,IV(iia),IV(iib)).

V—Wave-equation based inversion meets reservoir characterization

E&P decisions by the oil and gas industry are not driven by estimates of elastic properties alone. Instead, these decisions are based on estimates of reservoir properties and their associated uncertainties. While ES has, in principle, the capability to map lateral variations of these properties, acceptance by reservoir engineers, who prefer to work with a variety of borehole and production data, has been slow because of our inability to include UQ and to translate seismic properties into relevant reservoir properties. As part of DNOISE III, we will change this with projects focussed on:

V(i)—Target-oriented inversion and characterization. V(ia) We will investigate possible ways to conduct target-oriented inversions at the level of the reservoir using interferometric redatuming techniques [43], and V(ib) tie wave-equation inversions to rock properties and attributes that characterize the reservoir [146], such as porosity, permeability [147] and certain lithology indicators [148], by including (empirical) rock-physics relations in targeted WEI with UQ.

V(ii)—Time-lapse inversions tied in with fluid flow. This project is aimed at seismic monitoring of (un)conventional production and is challenging because it requires integration with the physics of fluid flow and fracturing [149–152]. We will investigate how to use reservoir simulations as optimization constraints for time-lapse inversions and how to integrate these inversions with randomized time-lapse acquisitions that exploit shared information amongst the vintages [16–18].

V(iii)—Information retrieval with deep learning. Translating inversions into tangible information that drives decisions such as “Where to drill?” and “How to produce?” remains the “holy grail”. We will investigate the use of deep learning with “group-invariant scattering” [153], which is linked to “lifting”, and its connections to our work. Deep learning is leading to unprecedented accuracies in computer vision [154] but is extremely data- and computationally intensive; we will have access to the data and computing resources this requires.

In summary. Integration of seismic inversion and reservoir characterization is essential to meet current challenges in conventional and unconventional settings, which require lithology and other characterizations from seismic data to monitor fluid flow and time-lapse changes and to mitigate risks. Deep learning also gives us a unique opportunity to shed new light on these challenging problems. Project leaders. Felix, Ozgur, and Mark. Project team. New PhD 6 (V(ia),V(ib)); new PhD 7 (V(ii)); new PDF 4 (V(iii)).

VI—Randomized computations and data handling

Without our extensive experience in sophisticated optimization, object-oriented programming and scientific-computing techniques, we would not have become the world’s leading group in computational ES. To continue building on our strengths, we will work on:

VI(i)—Stochastic optimization. Like ML, ES has to contend with massive amounts of data, but unlike ML, WEI also involves exceedingly large models and computationally expensive misfit and gradient calculations. By working with subsets of data (e.g., shots) and limited accuracy of wave simulations, significant progress has been made in controlling the cost of WEI. So far, this approach [47,82] has mostly been based on heuristics motivated by convex convergence analyses [155,156]. These results have provided fundamental insights but are of limited use because WEIs can be non-convex and typically cannot be run to “convergence”. To address these issues, we will work on VI(ia) optimization schemes that maximize progress during the first iterations by adapting recent innovations [157]; VI(ib) extensions of this approach to non-convex problems [158] that work VI(ic) asynchronously, so that late-arriving gradients still partake in the optimization [159–161], VI(id) adaptively, so that subsets of data that are strongly coupled to the model are selected with priority, and VI(ie) in a decentralized fashion [162], so that each machine works independently and only communicates locally to reduce communication. VI(if) To achieve additional performance improvements, we will also work on schemes that can work with cheap and inaccurate gradients (e.g., gradients based on simplified physics) interspersed with infrequent accurate gradient calculations [163]. Our aim is to achieve an order of magnitude improvement from these algorithms, as in ML [164].
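
The basic idea of working with subsets of shots can be sketched on a toy problem. The example below is a minimal sketch, assuming a linear least-squares misfit in which each "shot" has its own small dense operator; it is not the scheme of [47,82], and all names, sizes, and the fixed step length are hypothetical choices made for illustration. Each iteration draws a random subset of shots and updates the model with the gradient of that subset only.

```python
import numpy as np

rng = np.random.default_rng(0)
n_shots, n_data, n_model = 64, 20, 10

# Hypothetical linearized "shots": each shot has its own operator and data.
true_model = rng.standard_normal(n_model)
operators = rng.standard_normal((n_shots, n_data, n_model))
data = operators @ true_model  # shape (n_shots, n_data), noise-free

def subset_gradient(model, shot_ids):
    """Least-squares gradient averaged over the selected shots only."""
    A = operators[shot_ids]            # (batch, n_data, n_model)
    r = A @ model - data[shot_ids]     # (batch, n_data) residuals
    return np.einsum("bdm,bd->m", A, r) / len(shot_ids)

model = np.zeros(n_model)
step, batch_size, n_iters = 0.01, 8, 300
for _ in range(n_iters):
    # Draw a small random subset of shots instead of touching all the data.
    shot_ids = rng.choice(n_shots, size=batch_size, replace=False)
    model -= step * subset_gradient(model, shot_ids)

relative_error = np.linalg.norm(model - true_model) / np.linalg.norm(true_model)
print("relative model error:", relative_error)
```

Each update here costs one eighth of a full gradient. This toy problem is convex and noise-free, so it says nothing about the non-convex, never-converged regime that VI(ia) to VI(if) are meant to address.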

VI(ii)—Large-scale (convex) optimization algorithms and concrete (parallel) implementations remain important in accomplishing DNOISE’s research objectives. VI(iia) Aside from continued development of highly optimized \(\ell_1\)-norm solvers, we will VI(iib) further build on SVD-free nuclear-norm minimizations using matrix factorizations [7], in support of our matrix completion, interferometric [31] and lifting methods [30]. The latter two are extremely challenging because the number of variables grows quadratically, but they offer new possibilities for solving non-convex problems such as “blind deconvolution” [30]. However, there is no guarantee that we arrive at a global minimum with our factorization approach. VI(iic) By working with Ben, we will remove this non-uniqueness via proper initializations [118,165,166] that can be extended to tensors; VI(iid) come up with a method to determine the rank of the factorization; and VI(iie) further leverage and integrate nuclear and other matrix and trace-norm objectives in WEI.
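
Factorization-based approaches of this kind typically rest on a standard characterization of the nuclear norm: for any factorization \(X = LR^T\), the quantity \(\tfrac{1}{2}(\|L\|_F^2 + \|R\|_F^2)\) is an upper bound on \(\|X\|_*\), and the bound is attained by a balanced factorization. Penalizing the factors therefore avoids computing SVDs inside the optimization loop. The sketch below checks this numerically on a small random low-rank matrix; it illustrates the identity only, not the specific formulation of [7], and the sizes and names are assumptions. The SVD appears here purely as a reference for the check.

```python
import numpy as np

rng = np.random.default_rng(0)
rank = 3

# Hypothetical low-rank matrix given by an arbitrary (unbalanced) factorization.
L_init = rng.standard_normal((40, rank))
R_init = rng.standard_normal((30, rank))
X = L_init @ R_init.T

def factor_bound(L, R):
    """SVD-free upper bound on the nuclear norm of L @ R.T."""
    return 0.5 * (np.linalg.norm(L, "fro") ** 2 + np.linalg.norm(R, "fro") ** 2)

# Reference value computed with an SVD, used only to check the bound.
nuclear_norm = np.linalg.norm(X, "nuc")
bound_initial = factor_bound(L_init, R_init)

# Balanced factorization L = U sqrt(S), R = V sqrt(S) attains the bound.
U, s, Vt = np.linalg.svd(X, full_matrices=False)
L_bal = U[:, :rank] * np.sqrt(s[:rank])
R_bal = Vt[:rank].T * np.sqrt(s[:rank])
bound_balanced = factor_bound(L_bal, R_bal)

print("nuclear norm:              ", nuclear_norm)
print("bound, initial factors:    ", bound_initial)
print("bound, balanced factors:   ", bound_balanced)
```

Because the factorized problem is non-convex in \(L\) and \(R\), an optimizer may stall away from the balanced minimizer, which is why initialization (VI(iic)) and rank selection (VI(iid)) matter.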

VI(iii)—Randomized algorithms. The sheer size of numerical problems in ES puts strains on even the largest HPC systems, which severely limits benefits from WEI. DNOISE has successfully employed randomized dimensionality reductions to speed up data-intensive operations by working on small randomized subsets of random phase encoded [63–65,137] or randomly selected shots [66,76]. Both approaches have connections to randomized-trace estimation [64,65,167] and sketching [168,169], and have been incorporated into randomized solvers and SVDs [130,170–172]. Building on our successful fast imaging [54,57], we will enhance these randomized techniques to meet the challenges of 3D ES by extending sketching to data misfits other than the \(\ell_2\)-norm, including VI(iiia) \(\ell_1\)-norms [168] and VI(iiib) matrix norms [169].
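
The connection between random source encoding and randomized trace estimation can be illustrated as follows. If the data residual is arranged as a matrix with one column per shot, the \(\ell_2\) misfit is its squared Frobenius norm, i.e., the trace of \(R^T R\). Multiplying \(R\) by a few random \(\pm 1\) encoding vectors gives an unbiased estimate of that trace from a handful of "supershots". The code is a minimal sketch under these assumptions; the residual is random and the function name, sizes, and number of encodings are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_receivers, n_shots = 50, 200

# Hypothetical residual matrix: one column per sequential shot.
residual = rng.standard_normal((n_receivers, n_shots))

# Full l2 misfit: squared Frobenius norm = trace(residual.T @ residual).
full_misfit = np.linalg.norm(residual, "fro") ** 2

def encoded_misfit(residual, n_encodings, rng):
    """Estimate the full misfit from a few randomly encoded supershots."""
    n_shots = residual.shape[1]
    # Random +/-1 source-encoding weights, one column per supershot.
    W = rng.choice([-1.0, 1.0], size=(n_shots, n_encodings))
    supershots = residual @ W  # (n_receivers, n_encodings)
    return np.linalg.norm(supershots, "fro") ** 2 / n_encodings

estimate = encoded_misfit(residual, n_encodings=20, rng=rng)
relative_error = abs(estimate - full_misfit) / full_misfit
print("full misfit:   ", full_misfit)
print("20 supershots: ", estimate)
print("relative error:", relative_error)
```

In an actual inversion the saving comes from linearity of the wave equation: each supershot requires a single simulation, so 20 encodings replace 200 sequential-shot simulations in this toy configuration, at the price of a noisy misfit estimate.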

VI(iv)—Scientific computing. SC offers the foundation for solving WEIs, including the reduced-space adjoint-state and full-space methods [83] for PDE-constrained optimization. The amount of data, the size of the models, and the mathematical structure of WEI make it one of the most challenging problems in SC. Therefore, we will exploit the mathematical properties of WEI and develop novel scalable techniques that respect matrix sparsity. In particular, we will formulate gradient computations as regularized least-squares problems. We will develop VI(iva) preconditioned iterative solvers for large-scale, ill-conditioned, indefinite systems with incomplete factorizations [125,126,173] and block-diagonal approximations; VI(ivb) alternative formulations for large-scale saddle-point systems using augmented Lagrangian techniques [131,132], and the interpretation of gradients as quasi-definite systems [133]; VI(ivc) function-space norms to measure residuals and regularization terms; and VI(ivd) randomized linear algebra algorithms based on sub-sampling and other methodologies [174] for solving the linear systems that arise throughout the optimization.

VI(v)—Object-oriented programming for Big Data. The large data size and algorithmic complexity of computational ES call for hybrid, verifiable, object-oriented code design for abstract numerical linear algebra [175,176], modelling and inversion [177] and PDE-constrained optimization [178]. During DNOISE, we built this object-oriented framework using MathWorks’ Parallel Computing Toolbox. This environment significantly shortened development times and greatly improved the code integrity and productivity of our research team. We will VI(va) include parallel I/O; VI(vb) incorporate checkpointing to improve resilience of large parallel jobs; VI(vc) include physical units and VI(vd) weighted norms and inner products; VI(ve) exploit multiple levels of parallelization; VI(vf) expose external libraries for hybrid computing; and VI(vg) explore new data-handling methods such as map-reduce [179] and graphical models [180]. We will also continue to integrate the professional seismic data processing software package Omega into our workflows.

In summary. Cutting-edge technologies in Computer Science are essential to make advances in computational ES. We are confident that the combination of randomized linear algebra with state-of-the-art optimization and software development will sustain our ability to drive innovations. Project leaders. Chen (Co-PI), Felix, Dominique (Co-PI), and Mark (Co-PI). Project team. Gerard Gorman (Co-I); Ben (Co-I); Ernie (PDF,VI(ia),VI(ib),VI(id),VI(iia),VI(iid)); new PhD 8 (VI(ib),VI(ic),VI(ie),VI(if)); new PDF 5 (VI(iib),VI(iic)); Rajiv (PhD,VI(iid),VI(iie)); Curt da Silva (PhD,VI(iiib)); New PhD 11 (VI(iiia),VI(ivd)); Ben Boucher (MSc,VI(va),VI(vb),VI(vc),VI(vd),VI(ve)); New PhD 12 (VI(vf),VI(vg)).

Scientific computing infrastructure

The research of DNOISE III is critically dependent on access to powerful high-performance computing (HPC) hardware and software, for parallel code development and for technology validation on 3D field data. The exclusive access to a large $10 M (17k core) computing facility in Brazil, made available to us by BG Group as an in-kind contribution, gives DNOISE III a virtually unparalleled position to scale up to industrial-size 3D field data sets, develop practical workflows and further optimize our algorithms. Access to this resource, which is the largest machine in South America and ranks 95th on the global TOP500 HPC list, will also allow us to conduct experiments and answer scientific questions that are normally unattainable. While this HPC resource puts us in a truly unique position, this machine is only available for scale-up and technology validation and cannot be used for the earlier development stages of our parallelized software. Therefore, it is absolutely essential that we maintain access to local HPC for the duration of this CRD to support the early stages of our parallel code development. Without this local HPC resource at UBC, we will lose access to the facility in Brazil. Short job return times during code development are also critical. We have made provisions to upgrade our 1120-core cluster at the midway point to ensure we meet the research goals of the CRD. The essential need for HPC access was also identified during the external review of EOAS, and we are working with the UBC administration towards an integrated solution.

The MathWorks Parallel Computing Toolbox (MPCT) and MATLAB Distributed Computing Server (MDCS) have been key components in our scalable, object-oriented software framework. The International Inversion Initiative (III) has adopted our approach and has purchased a license for 4000 MPCT workers. This ranks among the largest parallel MATLAB installations in the world. We are working with BG Group, the main industrial stakeholder for III, to develop parallel I/O for field data sets. To meet the increased interest in the industrialization of our research findings, we have added extra support for our HPC resources and seismic data processing. Thanks to our partnership with III, our technical team at UBC will get support from HPC and PGS-led processing teams in Brazil. With these unparalleled resources, we will be the first academic group capable of developing WEI prototypes into industry-strength solutions.

Contribution to the training of highly-qualified personnel